
Connect to GridDB Cloud with Local Development Environment (How to set up and use OpenVPN)

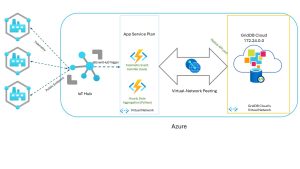


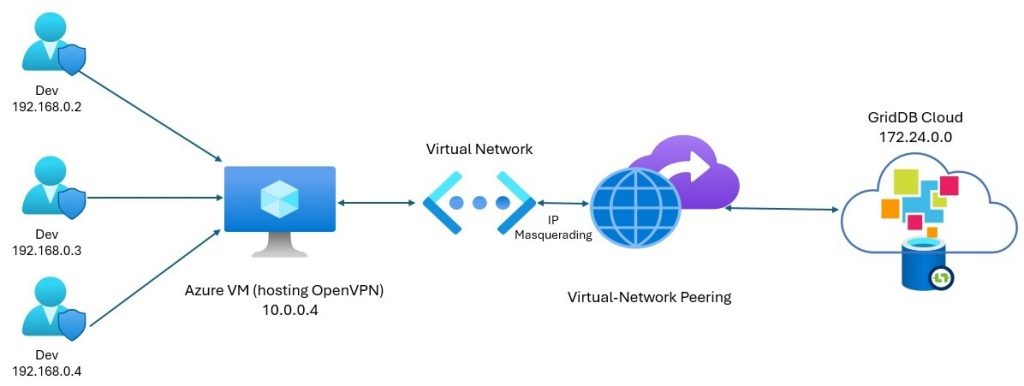

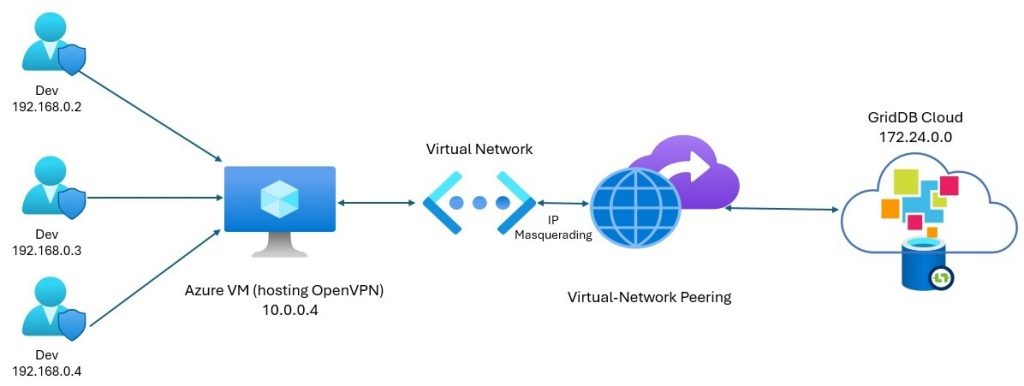

With GridDB Cloud 3.1, you can now access the native API of GridDB through Azure's virtual network peering. Any virtual network (vnet) that you set up in your Azure cloud environment can establish what is called a peering connection, which lets two otherwise separate networks communicate over Azure's infrastructure. Through this, any virtual machine connected to that vnet can use the GridDB Cloud native APIs. We discuss this at greater length here: https://griddb.net/en/blog/griddb-cloud-v3-1-how-to-use-the-native-apis-with-azures-vnet-peering/

In this article, we will build upon that idea and show you how to set up a VPN that lets you reach GridDB Cloud from your local environment, meaning you can freely use GridDB with your existing application code as long as you are connected to the VPN.

Prereqs

To fully utilize GridDB Cloud with native APIs in your local environment, you will of course need access to one of the paid GridDB Cloud instances: https://griddb.net/en/blog/griddb-cloud-azure-marketplace/. The nice thing, though, is that the marketplace offers one-month trial versions, so you can try out GridDB Cloud's features for free!

You will also need to have set up the vnet peering described in the blog linked in the opening paragraph: GridDB Cloud v3.1 – How to Use the Native APIs with Azure's VNET Peering

If you have this set up, you should have the following in your Azure resources:

- GridDB Cloud (Pay As You Go)
- An Azure Virtual Network with a peering connection to GridDB Cloud
- A virtual machine connected to the above vnet

Please note that all of the above will incur some cost on Azure (for example, an Azure VM B1 instance costs roughly $8/month if left on at all times).

OpenVPN and IP Masquerading

This setup works through something called IP Masquerading, which is "a process where one computer acts as an IP gateway for a network. All computers on the network send their IP packets through the gateway, which replaces the source IP address with its own address and then forwards it to the internet." (https://www.linux.com/training-tutorials/what-ip-masquerading-and-when-it-use/).

Essentially, traffic from your local machine that is destined for the GridDB Cloud IP is routed through the VPN to the Azure VM, which replaces the source address with its own. GridDB Cloud sees a request that appears to come from a machine inside the peered network (the VM) and accepts it. The response then travels back through the virtual network to the VM, which forwards it to your local environment.

So to get this running, you simply need to set up OpenVPN on the Azure virtual machine and then add the rule that enables IP Masquerading.

Install OpenVPN

To install OpenVPN and the client certs for my machine, I used the guide from Ubuntu: https://documentation.ubuntu.com/server/how-to/security/install-openvpn/. By following this guide, you will have OpenVPN installed on your Azure VM and certs on your local machine that can connect to your VM.

1. Install OpenVPN & Easy-RSA

Run on the Azure VM:

    sudo apt install openvpn easy-rsa

2. Set Up the PKI (Certificate Authority)

    sudo make-cadir /etc/openvpn/easy-rsa
    cd /etc/openvpn/easy-rsa/

Initialize PKI:

    ./easyrsa init-pki

Build the CA:

    ./easyrsa build-ca

3. Generate Server Certificates

Generate server key request:

    ./easyrsa gen-req myservername nopass

Generate Diffie-Hellman params:

    ./easyrsa gen-dh

Sign server certificate:

    ./easyrsa sign-req server myservername

Copy required files into /etc/openvpn/:

    pki/dh.pem
    pki/ca.crt
    pki/issued/myservername.crt
    pki/private/myservername.key

4. Create Client Certificates

Generate client key request:

    ./easyrsa gen-req myclient1 nopass

Sign client cert:

    ./easyrsa sign-req client myclient1

Securely copy to the client machine:

- ca.crt (from earlier)
- myclient1.crt (inside /pki/issued)
- myclient1.key (inside /pki/private)

5. Configure the OpenVPN Server

Copy sample config:

    sudo cp /usr/share/doc/openvpn/examples/sample-config-files/server.conf /etc/openvpn/myserver.conf

Edit myserver.conf so these lines reference your certs:

    ca ca.crt
    cert myservername.crt
    key myservername.key
    dh dh.pem

Generate TLS auth key:

    sudo openvpn --genkey secret ta.key

Enable IP forwarding. Edit /etc/sysctl.conf and set:

    net.ipv4.ip_forward=1

Apply:

    sudo sysctl -p /etc/sysctl.conf

Start the server:

    sudo systemctl start openvpn@myserver

6. Configure the Client

Install OpenVPN:

    sudo apt install openvpn

Copy sample config:

    sudo cp /usr/share/doc/openvpn/examples/sample-config-files/client.conf /etc/openvpn/

Place the files on the client:

- ca.crt
- myclient1.crt
- myclient1.key
- ta.key

Edit client.conf:

    client
    remote your.server.ip 1194
    ca ca.crt
    cert myclient1.crt
    key myclient1.key
    tls-auth ta.key 1

Start client:

    sudo systemctl start openvpn@client

7. Quick Troubleshooting

Check logs:

    sudo journalctl -u openvpn@myserver -xe
    sudo journalctl -u openvpn@client -xe

Ensure:

- Ports match
- Protocol (udp/tcp) matches
- tls-auth index matches (0 on server, 1 on client)
- Same cipher, auth, and dev tun settings

IP Masquerading

As explained above, if you try to connect now, it simply won't work: the traffic reaches GridDB Cloud with the IP of your local environment as its source, which is blocked by security rules. Once masquerading is turned on, it will work. Run the following command in your VM:

    sudo iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

And that should do it! To verify, you can of course run the sample code from the previous blog. But before going through that effort, you can also simply try this: from the local environment (connected to the VPN), ping the IP of your GridDB Cloud DB (it can be read from the notification provider URL on the GridDB Cloud UI home page):

    ping 172.26.30.68

And then on your Azure VM (the one hosting the VPN, which can also connect to GridDB Cloud) run:

    sudo tcpdump -i eth0 -n host 172.26.30.68

If everything is working, tcpdump will show your pings passing through the VM on their way to GridDB Cloud. Cool!

To run the sample code, start by cloning the GitHub repo and switching to the correct branch:

    $ git clone https://github.com/griddbnet/Blogs.git --branch griddb_cloud_paid_guide

Then set the env variables for your GridDB connection:

    export GRIDDB_NOTIFICATION_PROVIDER=""
    export GRIDDB_CLUSTER_NAME=""
    export GRIDDB_USERNAME=""
    export GRIDDB_PASSWORD=""
    export GRIDDB_DATABASE=""

From here, navigate to either the java or python dirs and run them! For java, do:

    $ mvn clean package
    $ java -jar target/java-samples-1.0-SNAPSHOT-jar-with-dependencies.jar

For python, after installing the python client, install the requirements (python3.12 -m pip install -r requirements.txt), make sure your JAVA_HOME and CLASSPATH env variables are set, and then run the code: python3.12
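Since both samples read their connection settings from the environment variables exported above, a quick pre-flight check can rule out a missing variable before you start debugging the VPN. The following is only a minimal sketch: the class `GridDbEnvCheck` and its `missing` method are hypothetical helpers, not part of the sample repository, and the only thing taken from the article is the list of variable names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical pre-flight check; not part of the griddbnet sample repository. */
final class GridDbEnvCheck {

    // Variable names taken from the export commands in this article.
    static final List<String> REQUIRED = List.of(
            "GRIDDB_NOTIFICATION_PROVIDER",
            "GRIDDB_CLUSTER_NAME",
            "GRIDDB_USERNAME",
            "GRIDDB_PASSWORD",
            "GRIDDB_DATABASE");

    /** Returns the required variables that are unset or blank in the given environment. */
    static List<String> missing(Map<String, String> env) {
        List<String> result = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.trim().isEmpty()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> missing = missing(System.getenv());
        if (missing.isEmpty()) {
            System.out.println("All GridDB connection variables are set.");
        } else {
            System.out.println("Missing or empty: " + String.join(", ", missing));
        }
    }
}
```

Passing the environment in as a map, rather than calling `System.getenv()` inside `missing`, keeps the check easy to exercise without touching the real shell environment.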

Welcome! We're about to build something useful: a volunteer-matching platform that connects skilled medical professionals with health organizations that need them. It's the kind of system you'd see powering real health events, from blood drives to vaccination clinics. By the time we're done, you'll understand how to architect and deploy a complete full-stack application that handles real-world complexity, including matching qualified people to opportunities, managing permissions across different user roles, and keeping everything secure.

The Stack: Technologies That Work Together

We're using a carefully selected tech stack that mirrors what you'll find in production environments:

- Spring Boot & Thymeleaf handle the business rules and data orchestration, and render dynamic HTML templates on the server side.
- GridDB (cloud-hosted NoSQL datastore) stores volunteer profiles, opportunities, and applications.

Each technology serves a specific purpose, and together they create a seamless user experience backed by robust backend logic.

Learning Roadmap

We'll move from foundation to mastery:

- Setup & Architecture: We'll start by understanding the three-layer system design, laying out your Maven project structure, and configuring Spring Boot for success.
- Core Features: Next, we'll implement the data model (entities, relationships, indexing) and set up GridDB integration.
- User Interface & Experience: Then we'll create server-rendered Thymeleaf templates for browsing opportunities, applying for roles, and managing skills. You'll see how server-side rendering keeps everything simple.
- Security: We'll add Spring Security authentication and implement role-based access control, so that organizers see different screens than volunteers and data stays protected.
- Real-World Patterns: Finally, we'll integrate real-time slot updates.

By completing this tutorial, you'll understand how to architect a full-stack Java application from database to user interface.
More importantly, you'll have a complete, deployable system you can adapt to other matching problems. Let's build something real.

Project Setup

Here's how we'll set it up:

1. Navigate to start.spring.io
2. Configure your project:
   - Project: Maven
   - Language: Java
   - Spring Boot: 3.5.x (latest stable version)
   - Group: com.example
   - Artifact: springboot-volunteermatching
   - Java Version: 21
3. Add the following dependencies: Spring Web, Thymeleaf, Spring Security
4. Click Generate to download a ZIP file with our project structure

Once you've downloaded and extracted the project, import it into your IDE. Next, we will create the package structure, grouping classes by the entity they belong to. For example, the organization package contains the controller, service, DTO, and so on for organizations.

    volunteer-matching/
    ├── pom.xml
    ├── src/main/java/com/volunteermatching/
    │   ├── config/                  (RestClient config)
    │   ├── griddb/
    │   ├── griddbwebapi/
    │   ├── opportunity/
    │   ├── opportunity_requirement/
    │   ├── organization/
    │   ├── organization_member/
    │   ├── registration/
    │   ├── security/                (Auth filters, RBAC)
    │   ├── skill/
    │   ├── user/
    │   └── volunteer_skill/
    └── src/main/resources/
        ├── templates/               (Thymeleaf templates)
        └── application.properties   (Configuration)

Connecting to the GridDB Cloud

Configure the credentials for connecting to GridDB Cloud over HTTP. Add the following to application.properties:

    # GridDB Configuration
    griddbcloud.base-url=https://cloud5197.griddb.com:443/griddb/v2/gs_cluster
    griddbcloud.auth-token=TTAxxxxxxx

Next, create a bean of org.springframework.web.client.RestClient, which provides a fluent, builder-based API for sending synchronous HTTP requests with cleaner syntax and improved readability.
    @Configuration
    public class RestClientConfig {

        private static final Logger LOGGER = LoggerFactory.getLogger(RestClientConfig.class);

        // getResponseBody(...) and logDuration(...) are helper methods not shown in this excerpt.
        @Bean("GridDbRestClient")
        public RestClient gridDbRestClient(
                @NonNull @Value("${griddbcloud.base-url}") final String baseUrl,
                @NonNull @Value("${griddbcloud.auth-token}") final String authToken) {
            return RestClient.builder()
                    .baseUrl(baseUrl)
                    .defaultHeader(HttpHeaders.AUTHORIZATION, "Basic " + authToken)
                    .defaultHeader(HttpHeaders.CONTENT_TYPE, MediaType.APPLICATION_JSON_VALUE)
                    .defaultHeader(HttpHeaders.ACCEPT, MediaType.APPLICATION_JSON_VALUE)
                    .defaultStatusHandler(
                            status -> status.is4xxClientError() || status.is5xxServerError(),
                            (request, response) -> {
                                String responseBody = getResponseBody(response);
                                LOGGER.error("GridDB API error: status={} body={}", response.getStatusCode(), responseBody);
                                if (response.getStatusCode().value() == 403) {
                                    LOGGER.error("Access forbidden - please check your auth token and permissions.");
                                    throw new ForbiddenGridDbConnectionException("Access forbidden to GridDB Cloud API.");
                                }
                                throw new GridDbException("GridDB API error: ", response.getStatusCode(), responseBody);
                            })
                    .requestInterceptor((request, body, execution) -> {
                        // Time every request so slow calls show up in the logs.
                        final long begin = System.currentTimeMillis();
                        ClientHttpResponse response = execution.execute(request, body);
                        logDuration(request, body, begin, response);
                        return response;
                    })
                    .build();
        }
    }

- @Bean("GridDbRestClient"): registers this client as a Spring bean so we can inject it anywhere with @Qualifier("GridDbRestClient") final RestClient restClient.
- .baseUrl(baseUrl): sets the common base URL for all requests.
- .defaultHeader(…): adds a header that will be sent with every request.
- .defaultStatusHandler(…): when the API returns an error (4xx or 5xx status code), logs the error status. If the status is 403, it throws a custom ForbiddenGridDbConnectionException. For any other error, it throws a general GridDbException.
- .requestInterceptor(…): logs how long the request took, which helps when debugging performance.
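The configuration above prepends "Basic " to the griddbcloud.auth-token property, which suggests the property holds the credential part of a standard HTTP Basic Authorization header, that is, the Base64 encoding of username:password. Assuming that is the case for your GridDB Cloud user, the token can be produced with the JDK alone. The class and method names below are illustrative, and the credentials in the usage note are placeholders.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Illustrative helper for producing an HTTP Basic credential string. */
final class BasicAuthToken {

    /** Base64-encodes "username:password", as the HTTP Basic scheme requires. */
    static String encode(String username, String password) {
        String credentials = username + ":" + password;
        return Base64.getEncoder().encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    /** Full header value, matching what the RestClient bean sends on every request. */
    static String headerValue(String username, String password) {
        return "Basic " + encode(username, password);
    }
}
```

For example, `BasicAuthToken.encode("user", "pass")` yields `dXNlcjpwYXNz`, and that string (without the "Basic " prefix) is what would go into griddbcloud.auth-token under this assumption.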
Next, create a helper that each service class will use to talk to GridDB Cloud over HTTP. It wraps the pre-configured RestClient and provides easy-to-use methods for common database operations. All the complicated parts (URLs, headers, error handling) are hidden inside this class.

    @Component
    public class GridDbClient {

        private final RestClient restClient;

        public GridDbClient(@Qualifier("GridDbRestClient") final RestClient restClient) {
            this.restClient = restClient;
        }

        // Creates a container (table) from the given definition.
        public void createContainer(final GridDbContainerDefinition containerDefinition) {
            try {
                restClient
                        .post()
                        .uri("/containers")
                        .body(containerDefinition)
                        .retrieve()
                        .toBodilessEntity();
            } catch (Exception e) {
                throw new GridDbException("Failed to create container", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }

        // Inserts or updates rows in the named container.
        public void registerRows(String containerName, Object body) {
            try {
                ResponseEntity<String> result = restClient
                        .put()
                        .uri("/containers/" + containerName + "/rows")
                        .body(body)
                        .retrieve()
                        .toEntity(String.class);
            } catch (Exception e) {
                throw new GridDbException("Failed to execute PUT request", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }

        // Fetches rows; the query (limit, sort, condition) travels in the POST body.
        public AcquireRowsResponse acquireRows(String containerName, AcquireRowsRequest requestBody) {
            try {
                ResponseEntity<AcquireRowsResponse> responseEntity = restClient
                        .post()
                        .uri("/containers/" + containerName + "/rows")
                        .body(requestBody)
                        .retrieve()
                        .toEntity(AcquireRowsResponse.class);
                return responseEntity.getBody();
            } catch (Exception e) {
                throw new GridDbException("Failed to execute POST request", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }

        public SQLSelectResponse[] select(List sqlStmts) {
            try {
                ResponseEntity<SQLSelectResponse[]> responseEntity = restClient
                        .post()
                        .uri("/sql/dml/query")
                        .body(sqlStmts)
                        .retrieve()
                        .toEntity(SQLSelectResponse[].class);
                return responseEntity.getBody();
            } catch (Exception e) {
                throw new GridDbException("Failed to execute /sql/dml/query", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }

        public SqlExecutionResult[] executeSqlDDL(List sqlStmts) {
            try {
                ResponseEntity<SqlExecutionResult[]> responseEntity = restClient
                        .post()
                        .uri("/sql/ddl")
                        .body(sqlStmts)
                        .retrieve()
                        .toEntity(SqlExecutionResult[].class);
                return responseEntity.getBody();
            } catch (Exception e) {
                throw new GridDbException("Failed to execute SQL DDL", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }

        public SQLUpdateResponse[] executeSQLUpdate(List sqlStmts) {
            try {
                ResponseEntity<SQLUpdateResponse[]> responseEntity = restClient
                        .post()
                        .uri("/sql/dml/update")
                        .body(sqlStmts)
                        .retrieve()
                        .toEntity(SQLUpdateResponse[].class);
                return responseEntity.getBody();
            } catch (Exception e) {
                throw new GridDbException("Failed to execute /sql/dml/update", HttpStatusCode.valueOf(500), e.getMessage(), e);
            }
        }
    }

The constructor takes the RestClient that was registered as GridDbRestClient. The @Qualifier makes sure we get the correct one. Every method follows the same safe structure:

- Try to send an HTTP request using restClient.
- If something goes wrong (network issue, wrong data, server error), catch the exception and rethrow it as a GridDbException.

Data Model using DTOs

Now, let's create the Data Transfer Objects (DTOs). DTOs are simple classes that carry information from one part of the app to another, for example, from the database to the screen. In this project, the DTOs represent important things like users, skills, organizations, and volunteer events. Each DTO has its own fields to hold the data, and each one matches the structure of the rows inside one GridDB container.

UserDTO: represents a user in the system, such as a volunteer or an organization admin. It's used to create, update, or display user information.

    public class UserDTO {

        @Size(max = 255)
        @UserIdValid
        private String id;

        @NotNull
        @Size(max = 255)
        @UserEmailUnique
        private String email;

        @NotNull
        @Size(max = 255)
        private String fullName;

        @NotNull
        private UserRole role;

        // Getters and setters omitted
    }

SkillDTO: represents a skill that volunteers can have, such as "First Aid" or "Paramedic." It's used to manage the list of available skills.
    public class SkillDTO {

        @Size(max = 255)
        private String id;

        @NotNull
        @Size(max = 255)
        @SkillNameUnique
        private String name;

        public SkillDTO() {}

        public SkillDTO(String id, String name) {
            this.id = id;
            this.name = name;
        }

        // Getters and setters omitted
    }

The remaining DTOs follow the same pattern:

- VolunteerSkillDTO: links a user (volunteer) to a specific skill. It includes details like when the skill expires and its verification status. It's useful for tracking what skills a volunteer has and their validity.
- OrganizationDTO: represents an organization that creates volunteer opportunities. It's used to manage organization details.
- OrganizationMemberDTO: links a user to an organization, specifying their role within it (e.g., member or admin). It's used to manage who belongs to which organization.
- OpportunityDTO: represents a volunteer opportunity, like an event that needs volunteers. It's used to create and display opportunities.
- OpportunityRequirementDTO: specifies the skills required for a volunteer opportunity. It links an opportunity to skills and indicates if a skill is mandatory.
- RegistrationDTO: represents a volunteer's registration for an opportunity. It tracks who signed up and the status of their registration.

Service Layer and Business Logic

Next, we implement the service layer. The services use these DTOs to handle business logic, communicate with GridDB Cloud through our client, and prepare data for the controllers.

The service class does not use a repository layer like JPA. Instead, it talks to GridDB Cloud directly through the GridDbClient. It implements the service interface, which means it must provide methods such as findAll() to get all rows, get() to find one row by ID, create() to add a new row, and others. When fetching data, it sends a request to GridDB for rows and then maps those rows into DTO objects. For saving or updating, it builds a JSON string from the data and sends it to GridDB Cloud.
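To make the "builds a JSON string" step concrete, here is a minimal sketch of serializing rows by hand. It assumes the row-registration endpoint accepts a JSON array of rows, where each row is itself an array of values in the container's column order. The `RowJson` class is hypothetical and the sample values in the usage note are made up; a real project would more likely hand a list to a JSON library such as Jackson and let it do the escaping.

```java
import java.util.List;

/** Hypothetical helper that serializes rows as a JSON array of arrays. */
final class RowJson {

    /** Wraps a string in double quotes and escapes characters JSON does not allow raw. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }

    /** Numbers and booleans are written bare, null as null, everything else as a string. */
    static String toJson(List<? extends List<?>> rows) {
        StringBuilder sb = new StringBuilder("[");
        for (int i = 0; i < rows.size(); i++) {
            if (i > 0) sb.append(',');
            sb.append('[');
            List<?> row = rows.get(i);
            for (int j = 0; j < row.size(); j++) {
                if (j > 0) sb.append(',');
                Object value = row.get(j);
                if (value == null) {
                    sb.append("null");
                } else if (value instanceof Number || value instanceof Boolean) {
                    sb.append(value);
                } else {
                    sb.append(quote(value.toString()));
                }
            }
            sb.append(']');
        }
        return sb.append(']').toString();
    }
}
```

For a registration row with made-up values, `RowJson.toJson(List.of(List.of("reg_1", "user_1", "opp_1", "PENDING", "2025-01-01T09:00:00.000Z")))` produces `[["reg_1","user_1","opp_1","PENDING","2025-01-01T09:00:00.000Z"]]`.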
The service also generates unique IDs using TsidCreator and handles date-times carefully by parsing and formatting them.

    @Service
    public class RegistrationGridDBService implements RegistrationService {

        private final Logger log = LoggerFactory.getLogger(getClass());
        private final GridDbClient gridDbClient;
        private static final String TBL_NAME = "VoMaRegistrations";

        public RegistrationGridDBService(final GridDbClient gridDbClient) {
            this.gridDbClient = gridDbClient;
        }

        // Column order here defines the positions used in extractRowToDTO below.
        public void createTable() {
            List<GridDbColumn> columns = List.of(
                    new GridDbColumn("id", "STRING", Set.of("TREE")),
                    new GridDbColumn("userId", "STRING", Set.of("TREE")),
                    new GridDbColumn("opportunityId", "STRING", Set.of("TREE")),
                    new GridDbColumn("status", "STRING"),
                    new GridDbColumn("registrationTime", "TIMESTAMP"));
            GridDbContainerDefinition containerDefinition = GridDbContainerDefinition.build(TBL_NAME, columns);
            this.gridDbClient.createContainer(containerDefinition);
        }

        @Override
        public List<RegistrationDTO> findAll() {
            AcquireRowsRequest requestBody =
                    AcquireRowsRequest.builder().limit(50L).sort("id ASC").build();
            AcquireRowsResponse response = this.gridDbClient.acquireRows(TBL_NAME, requestBody);
            if (response == null || response.getRows() == null) {
                log.error("Failed to acquire rows from GridDB");
                return List.of();
            }
            return response.getRows().stream()
                    .map(this::extractRowToDTO)
                    .collect(Collectors.toList());
        }

        // Maps one raw row (values in column order) to a DTO; unparsable values become null.
        private RegistrationDTO extractRowToDTO(List<Object> row) {
            RegistrationDTO dto = new RegistrationDTO();
            dto.setId((String) row.get(0));
            dto.setUserId((String) row.get(1));
            dto.setOpportunityId((String) row.get(2));
            try {
                dto.setStatus(RegistrationStatus.valueOf(row.get(3).toString()));
            } catch (Exception e) {
                dto.setStatus(null);
            }
            try {
                dto.setRegistrationTime(DateTimeUtil.parseToLocalDateTime(row.get(4).toString()));
            } catch (Exception e) {
                dto.setRegistrationTime(null);
            }
            return dto;
        }

        @Override
        public RegistrationDTO get(final String id) {
            AcquireRowsRequest requestBody = AcquireRowsRequest.builder()
                    .limit(1L)
                    .condition("id == '" + id + "'")
                    .build();
            AcquireRowsResponse response = this.gridDbClient.acquireRows(TBL_NAME, requestBody);
            if (response == null || response.getRows() == null) {
                log.error("Failed to acquire rows from GridDB");
                throw new NotFoundException("Registration not found with id: " + id);
            }
            return response.getRows().stream()
                    .findFirst()
                    .map(this::extractRowToDTO)
                    .orElseThrow(() -> new NotFoundException("Registration not found with id: " + id));
        }

        public String nextId() {
            return TsidCreator.getTsid().format("reg_%s");
        }

        // create(...) belongs to this service but is not shown in this excerpt.
        @Override
        public String register(String userId, String opportunityId) {
            RegistrationDTO registrationDTO = new RegistrationDTO();
            registrationDTO.setUserId(userId);
            registrationDTO.setOpportunityId(opportunityId);
            registrationDTO.setStatus(RegistrationStatus.PENDING);
            registrationDTO.setRegistrationTime(LocalDateTime.now());
            return create(registrationDTO);
        }
    }

Implement the validation

We create a dedicated service class for validating volunteer registration requests against opportunity requirements. Some benefits of this approach:

- Hides the complexity.
  If the rules change later (e.g., "User needs 2 out of 3 skills"), we only change that one place.
- Business validation logic is isolated from HTTP concerns.
- The validation service can be used by REST APIs or other controllers.
- The service can be unit tested independently.
- Clear, focused exception handling with rich context.

    @Service
    public class RegistrationValidationService {

        private final Logger log = LoggerFactory.getLogger(getClass());
        private final RegistrationService registrationService;
        private final OpportunityService opportunityService;
        private final OpportunityRequirementService opportunityRequirementService;
        private final VolunteerSkillService volunteerSkillService;
        private final SkillService skillService;

        public RegistrationValidationService(
                final RegistrationService registrationService,
                final OpportunityService opportunityService,
                final OpportunityRequirementService opportunityRequirementService,
                final VolunteerSkillService volunteerSkillService,
                final SkillService skillService) {
            this.registrationService = registrationService;
            this.opportunityService = opportunityService;
            this.opportunityRequirementService = opportunityRequirementService;
            this.volunteerSkillService = volunteerSkillService;
            this.skillService = skillService;
        }

        public void validateRegistration(final String userId, final String opportunityId) {
            // Check 1: User not already registered
            validateNotAlreadyRegistered(userId, opportunityId);
            // Check 2: Opportunity has available slots
            validateSlotsAvailable(opportunityId);
            // Check 3: User has mandatory skills
            validateMandatorySkills(userId, opportunityId);
        }

        private void validateNotAlreadyRegistered(final String userId, final String opportunityId) {
            Optional<RegistrationDTO> existingReg =
                    registrationService.getByUserIdAndOpportunityId(userId, opportunityId);
            if (existingReg.isPresent()) {
                throw new AlreadyRegisteredException(userId, opportunityId);
            }
        }

        private void validateSlotsAvailable(final String opportunityId) {
            OpportunityDTO opportunity = opportunityService.get(opportunityId);
            Long registeredCount = registrationService.countByOpportunityId(opportunityId);
            if (registeredCount >= opportunity.getSlotsTotal()) {
                throw new OpportunitySlotsFullException(opportunityId, opportunity.getSlotsTotal(), registeredCount);
            }
        }

        private void validateMandatorySkills(final String userId, final String opportunityId) {
            List<VolunteerSkillDTO> userSkills = volunteerSkillService.findAllByUserId(userId);
            List<OpportunityRequirementDTO> opportunityRequirements =
                    opportunityRequirementService.findAllByOpportunityId(opportunityId);
            for (OpportunityRequirementDTO requirement : opportunityRequirements) {
                // Optional requirements never block a registration.
                if (!requirement.getIsMandatory()) {
                    continue;
                }
                boolean hasSkill = userSkills.stream()
                        .anyMatch(userSkill -> userSkill.getSkillId().equals(requirement.getSkillId()));
                if (!hasSkill) {
                    SkillDTO skill = skillService.get(requirement.getSkillId());
                    String skillName = skill != null ? skill.getName() : "Unknown Skill";
                    throw new MissingMandatorySkillException(userId, opportunityId, requirement.getSkillId(), skillName);
                }
            }
        }
    }

This service depends on 5 collaborating services (Opportunity, OpportunityRequirement, VolunteerSkill, Skill, Registration) and throws a custom exception for each validation failure, allowing callers to handle different error scenarios appropriately (e.g., different error messages, logging, etc.).

HTTP Layer

Now, we need a class that handles all incoming web requests, processes user input, and sends back responses. It's the bridge between the user's browser and the application's logic.
    @Controller
    @RequestMapping("/opportunities")
    public class OpportunityController {

        private final Logger log = LoggerFactory.getLogger(getClass());
        private final OpportunityService opportunityService;
        private final RegistrationService registrationService;
        private final RegistrationValidationService registrationValidationService;
        private final UserService userService;
        private final OpportunityRequirementService opportunityRequirementService;
        private final SkillService skillService;

        public OpportunityController(
                final OpportunityService opportunityService,
                final RegistrationService registrationService,
                final RegistrationValidationService registrationValidationService,
                final UserService userService,
                final OpportunityRequirementService opportunityRequirementService,
                final SkillService skillService) {
            this.opportunityService = opportunityService;
            this.registrationService = registrationService;
            this.registrationValidationService = registrationValidationService;
            this.userService = userService;
            this.opportunityRequirementService = opportunityRequirementService;
            this.skillService = skillService;
        }

        // Organizers see their organization's opportunities; everyone else sees all of them.
        // extractOpportunities(...) is a private helper not shown in this excerpt.
        @GetMapping
        public String list(final Model model, @AuthenticationPrincipal final CustomUserDetails userDetails) {
            List<OpportunityDTO> allOpportunities = new ArrayList<>();
            UserDTO user = userDetails != null
                    ? userService.getOneByEmail(userDetails.getUsername()).orElse(null)
                    : null;
            if (userDetails != null
                    && userDetails.getOrganizations() != null
                    && !userDetails.getOrganizations().isEmpty()) {
                OrganizationDTO org = userDetails.getOrganizations().get(0);
                model.addAttribute("organization", org);
                allOpportunities = opportunityService.findAllByOrgId(org.getId());
            } else {
                model.addAttribute("organization", null);
                allOpportunities = opportunityService.findAll();
            }
            List opportunities = extractOpportunities(allOpportunities, user);
            model.addAttribute("opportunities", opportunities);
            return "opportunity/list";
        }

        @GetMapping("/add")
        @PreAuthorize(SecurityExpressions.ORGANIZER_ONLY)
        public String add(
                @ModelAttribute("opportunity") final OpportunityDTO opportunityDTO,
                final Model model,
                @AuthenticationPrincipal final CustomUserDetails userDetails) {
            if (userDetails != null
                    && userDetails.getOrganizations() != null
                    && !userDetails.getOrganizations().isEmpty()) {
                OrganizationDTO org = userDetails.getOrganizations().get(0);
                opportunityDTO.setOrgId(org.getId());
            }
            opportunityDTO.setId(opportunityService.nextId());
            return "opportunity/add";
        }

        @PostMapping("/add")
        @PreAuthorize(SecurityExpressions.ORGANIZER_ONLY)
        public String add(
                @ModelAttribute("opportunity") @Valid final OpportunityDTO opportunityDTO,
                final BindingResult bindingResult,
                final RedirectAttributes redirectAttributes) {
            if (bindingResult.hasErrors()) {
                return "opportunity/add";
            }
            opportunityService.create(opportunityDTO);
            redirectAttributes.addFlashAttribute(WebUtils.MSG_SUCCESS, WebUtils.getMessage("opportunity.create.success"));
            return "redirect:/opportunities";
        }

        @GetMapping("/edit/{id}")
        @PreAuthorize(SecurityExpressions.ORGANIZER_ONLY)
        public String edit(@PathVariable(name = "id") final String id, final Model model) {
            model.addAttribute("opportunity", opportunityService.get(id));
            return "opportunity/edit";
        }

        @PostMapping("/edit/{id}")
        @PreAuthorize(SecurityExpressions.ORGANIZER_ONLY)
        public String edit(
                @PathVariable(name = "id") final String id,
                @ModelAttribute("opportunity") @Valid final OpportunityDTO opportunityDTO,
                final BindingResult bindingResult,
                final RedirectAttributes redirectAttributes) {
            if (bindingResult.hasErrors()) {
                return "opportunity/edit";
            }
            opportunityService.update(id, opportunityDTO);
            redirectAttributes.addFlashAttribute(WebUtils.MSG_SUCCESS, WebUtils.getMessage("opportunity.update.success"));
            return "redirect:/opportunities";
        }

        @PostMapping("/{id}/registrations")
        public String registrations(
                @PathVariable(name = "id") final String opportunityId,
                final RedirectAttributes redirectAttributes,
                @AuthenticationPrincipal final UserDetails userDetails) {
            UserDTO user = userService
                    .getOneByEmail(userDetails.getUsername())
                    .orElseThrow(() -> new UsernameNotFoundException("User not found"));
            try {
                // Validate registration using the validation service
                registrationValidationService.validateRegistration(user.getId(), opportunityId);
                // If validation passes, proceed with registration
                OpportunityDTO opportunityDTO = opportunityService.get(opportunityId);
                registrationService.register(user.getId(), opportunityId);
                log.debug(
                        "Registration Successful - user: {}, opportunity: {}",
                        user.getFullName(),
                        opportunityDTO.getTitle());
                redirectAttributes.addFlashAttribute(
                        WebUtils.MSG_INFO, WebUtils.getMessage("opportunity.registrations.success"));
                return "redirect:/opportunities/" + opportunityId;
            } catch (AlreadyRegisteredException e) {
                redirectAttributes.addFlashAttribute(
                        WebUtils.MSG_ERROR, WebUtils.getMessage("opportunity.registrations.already_registered"));
                return "redirect:/opportunities/" + opportunityId;
            } catch (OpportunitySlotsFullException e) {
                redirectAttributes.addFlashAttribute(
                        WebUtils.MSG_ERROR, WebUtils.getMessage("opportunity.registrations.full"));
                return "redirect:/opportunities/" + opportunityId;
            } catch (MissingMandatorySkillException e) {
                redirectAttributes.addFlashAttribute(
                        WebUtils.MSG_ERROR,
                        WebUtils.getMessage("opportunity.registrations.missing_skill", e.getSkillName()));
                return "redirect:/opportunities/" + opportunityId;
            }
        }
    }

The OpportunityController.java:

- Doesn't do the work itself; it delegates to specialized services. This keeps code organized and reusable.
- Manages everything related to /opportunities URLs, for example, listing volunteer opportunities.
- Receives the services it needs through constructor injection.
- Uses @PreAuthorize to ensure only authorized users perform restricted actions.
- Validates registrations using registrationValidationService. If validation fails, it catches the specific exception and shows the matching error message.
- Stays a clean controller: it focuses on orchestration only.

User Interface Preview

Listing opportunity page:

Register page:

Conclusion

Building a volunteer-matching web application for a health event is a practical project that trains core skills: Spring Boot service design, server-rendered Thymeleaf UI, cloud NoSQL integration, and RBAC. Feel free to add more features like email notifications or calendar integration. Keep building, keep

Modern Ecological Research is largely data-driven, with actionable insights and decisions made using massive, complex datasets.

Now that GridDB Cloud can be reached from your code without the Web API, that is, through its native NoSQL interface (Java, Python, etc.), we can explore connecting it to various Azure services through virtual network peering. Because the peering connection links our GridDB Cloud instance to our virtual network, anything that can join that virtual network can communicate directly with GridDB Cloud.

Note: this is only available for the GridDB Cloud offered at Microsoft Azure Marketplace; the GridDB Cloud Free Plan from the Toshiba page does not support VNET peering.

Source code can be found on the griddbnet GitHub:

    $ git clone https://github.com/griddbnet/Blogs.git --branch azure_connected_services

Introduction

In this article, we will explore connecting our GridDB Cloud instance to Azure's IoT Hub to store telemetry data. We have previously made a web course on how to set up the Azure IoT Hub with GridDB Cloud, but through the Web API. That can be found here: https://www.udemy.com/course/griddb-and-azure-iot-hub/?srsltid=AfmBOopFTwFHI7OvQOEXt4P_cWxuo3NaJ9XkbNDHHWX5Tgky4QZzJlD3.

You can also learn how to connect your GridDB Cloud instance to your Azure virtual network through vnet peering here: https://griddb.net/en/blog/griddb-cloud-v3-1-how-to-use-the-native-apis-with-azures-vnet-peering/. As a bonus, we have also written a blog on how to connect your local environment to your cloud-hosted GridDB instance through a VPN so that you can use your local programming environment; blog here: GridDB Cloud v3.1 – How to Use the Native APIs with Azure's VNET Peering

So for this one, let's get started with our IoT Hub implementation. We will be setting up an IoT Hub with any number of devices, which will trigger a GridDB write whenever telemetry data is detected.
We will then also set up another Azure Function which will run on a simple timer (every 1 hour) and perform a simple aggregation of the IoT sensor data to keep the data tidy and ready for analysis. There is also source code for setting up a Kafka connection through a timer which will read all data from within the past 5 minutes and stream that data out through Kafka, but we won’t discuss it here. Azure’s Cloud Infrastructure Let’s talk briefly about the Azure services that we will need to use to get all of this running. First, the IoT Hub. Azure’s IoT Hub You can read about what the IoT Hub does here: https://learn.microsoft.com/en-us/azure/iot-hub/. Its purpose is to make it easy to manage a fleet of IoT sensors which exist in the real world, emitting data at intervals which needs to be stored and analyzed. For this article, we will simply create one virtual device and push data from it using a Python script provided by Microsoft (source code here: https://github.com/Azure/azure-iot-sdk-python). You can learn how to create the IoT Hub and how to deploy code/functions through an older blog: https://griddb.net/en/blog/iot-hub-azure-griddb-cloud/. Because this information is already available there, we will continue on assuming you have already built the IoT Hub in your Azure account and will just discuss the source code needed to get our data to GridDB Cloud through the native Java API. Azure Functions The real glue of this setup is our Azure Functions. For this article, I created an Azure Function Standard Plan. From there, I connected the standard plan to the virtual network which is already peer-connected to our GridDB Cloud instance. With this simple step, all of the Azure Functions which we deploy and use on this app service plan will already be able to communicate with GridDB Cloud seamlessly. 
And for our Azure Function that combines with the IoT Hub to detect events and use that data to run some code, we will use a specific function binding in our Java code: @EventHubTrigger(name = "message", eventHubName = "events", connection = "IotHubConnectionString", consumerGroup = "myfuncapp-cg", cardinality = Cardinality.ONE) String message,. In this case, we are telling our Azure Function that whenever an event occurs in our IoT Hub (as defined in the IotHubConnectionString), we want to run the following code. The magic is all contained within Azure Functions and that IotHubConnectionString, whose value is the IoT Hub setting called primary connection string. So in your Azure Function, once you create it, head to Settings -> Environment Variables and set the IotHubConnectionString as well as your GridDB Cloud credentials. If you are using VSCode, you can set these vars in the local.settings.json file created when you select Azure Functions Create Function App (as mentioned in the blog linked above) and then do Azure Functions: Deploy Local Settings. IoT Hub Event Triggering Now let’s look at the actual source code that pushes data to GridDB Cloud. Java Source Code for Pushing Event Telemetry Data Our goal here is to log the data from all of the sensors in our IoT Hub into persistent storage (aka GridDB Cloud). To do this, we use Azure Functions and their special bindings/triggers. In this case, we want to detect whenever our IoT Hub receives telemetry data from its sensors, which will then fire off our Java code, which will forge a connection to GridDB through its NoSQL interface and simply write that row of data. 
Here is the main method in Java: public class IotTelemetryHandler { private static GridDB griddb = null; private static final ObjectMapper MAPPER = new ObjectMapper(); @FunctionName(“IoTHubTrigger”) public void run( @EventHubTrigger(name = “message”, eventHubName = “events”, connection = “IotHubConnectionString”, consumerGroup = “myfuncapp-cg”, cardinality = Cardinality.ONE) String message, @BindingName(“SystemProperties”) Map properties, final ExecutionContext context) { TelemetryData data; try { data = MAPPER.readValue(message, TelemetryData.class); } catch (Exception e) { context.getLogger().severe(“Failed to parse JSON message: ” + e.getMessage()); context.getLogger().severe(“Raw Message: ” + message); return; } try { context.getLogger().info(“Java Event Hub trigger processed a message: ” + message); String deviceId = properties.get(“iothub-connection-device-id”).toString(); String eventTimeIso = properties.get(“iothub-enqueuedtime”).toString(); Instant enqueuedInstant = Instant.parse(eventTimeIso); long eventTimeMillis = enqueuedInstant.toEpochMilli(); Timestamp dbTimestamp = new Timestamp(eventTimeMillis); data.ts = dbTimestamp; context.getLogger().info(“Data received from Device: ” + deviceId); griddb = new GridDB(); String containerName = “telemetryData”; griddb.CreateContainer(containerName); griddb.WriteToContainer(containerName, data); context.getLogger().info(“Successfully saved to DB.”); } catch (Throwable t) { context.getLogger().severe(“CRITICAL: Function execution failed with exception:”); context.getLogger().severe(t.toString()); // throw new RuntimeException(“GridDB processing failed”, t); } } } The Java code itself is vanilla, it’s what the Azure Functions bindings do that is the real magic. As explained above, using the IoT Hub connection string directs what events are being polled to grab those values and eventually be written to GridDB. Data Aggregation So now we’ve got thousands of rows of data from our sensors inside of our DB. 
A typical workflow in this scenario might be a separate service which runs aggregations on a timer to help manage the data or keep around an easy-to-reference snapshot of the data in your sensors. Python is a popular vehicle for running data-science-y type operations, so let’s set up the GridDB Python client and let’s run a simple average function every hour. Python Client While using Java in the Azure function works out of the box (as shown above), the python client has some requirements for installing and being run. Specifically, we need to actually have Java installed, as well as some special-built java jar files. The easiest way to get this sort of environment set up in an Azure Function is to use Docker. With Docker, we can include all of the libraries and instructions needed to install the python client and deploy the container with all source code as is. The Python script will then run on a timer every 1 hour and write to a new GridDB Cloud table which will keep track of the hourly aggregates of each data point. Dockerize Python Client To dockerize our python client, we need to convert the instructions on how to install the python client into docker instructions, as well as copy the source code and credentials. 
Here is what the Dockerfile looks like: FROM mcr.microsoft.com/azure-functions/python:4-python3.12 ENV AzureWebJobsScriptRoot=/home/site/wwwroot \ AzureFunctionsJobHost__Logging__Console__IsEnabled=true ENV PYTHONUNBUFFERED=1 ENV GRIDDB_NOTIFICATION_PROVIDER="[notification_provider]" ENV GRIDDB_CLUSTER_NAME="[clustername]" ENV GRIDDB_USERNAME="[griddb-user]" ENV GRIDDB_PASSWORD="[password]" ENV GRIDDB_DATABASE="[database]" WORKDIR /home/site/wwwroot RUN apt-get update && \ apt-get install -y default-jdk git maven && \ rm -rf /var/lib/apt/lists/* ENV JAVA_HOME=/usr/lib/jvm/default-java WORKDIR /tmp RUN git clone https://github.com/griddb/python_client.git && \ cd python_client/java && \ mvn install RUN mkdir -p /home/site/wwwroot/lib && \ mv /tmp/python_client/java/target/gridstore-arrow-5.8.0.jar /home/site/wwwroot/lib/gridstore-arrow.jar WORKDIR /tmp/python_client/python RUN python3.12 -m pip install . WORKDIR /home/site/wwwroot COPY ./lib/gridstore.jar /home/site/wwwroot/lib/ COPY ./lib/arrow-memory-netty.jar /home/site/wwwroot/lib/ COPY ./lib/gridstore-jdbc.jar /home/site/wwwroot/lib/ COPY *.py . COPY requirements.txt . RUN python3.12 -m pip install -r requirements.txt ENV CLASSPATH=/home/site/wwwroot/lib/gridstore.jar:/home/site/wwwroot/lib/gridstore-jdbc.jar:/home/site/wwwroot/lib/gridstore-arrow.jar:/home/site/wwwroot/lib/arrow-memory-netty.jar Once in place, you do the normal docker build, docker tag, docker push. But there is one caveat! Azure Container Registry Though not necessary, setting up your own Azure Container Registry (ACR) (think Dockerhub) to host your images makes life a whole lot simpler for deploying your code to Azure Functions. So in my case, I set up an ACR, and then pushed my built images into that repository. Once there, I went to the deployment center of my new Python Azure Function and selected my container’s name etc. From there, it will deploy and run based on your stipulations. Cool! 
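Going back to the Dockerfile for a moment: because the GridDB settings are baked in as environment variables, the function code can read them back at startup and fail early if any were left as placeholders. Here is a minimal sketch of that idea, assuming the variable names from the Dockerfile above; the helper name load_griddb_settings and the fail-fast behavior are illustrative choices, not something taken from the project’s source:

```python
import os

# Variable names taken from the Dockerfile shown above.
REQUIRED_VARS = (
    "GRIDDB_NOTIFICATION_PROVIDER",
    "GRIDDB_CLUSTER_NAME",
    "GRIDDB_USERNAME",
    "GRIDDB_PASSWORD",
    "GRIDDB_DATABASE",
)


def load_griddb_settings(env=None):
    """Return the GridDB settings as a dict, or raise if any are unusable.

    Hypothetical helper: a value counts as unusable when it is missing,
    empty, or still a bracketed placeholder such as "[clustername]".
    """
    env = os.environ if env is None else env
    settings = {}
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "").strip()
        if not value or (value.startswith("[") and value.endswith("]")):
            problems.append(name)
        else:
            settings[name] = value
    if problems:
        raise RuntimeError("Unset or placeholder variables: " + ", ".join(problems))
    return settings
```

Calling load_griddb_settings() once before connecting turns a confusing connection failure into a clear message that names the variable which still needs a real value.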
Python Code to do Data Aggregation Similar to the Java implementation above, we will use the Azure Function bindings/trigger on the python code to use a cron-style layout for the timer. Under the hood, the Azure Function infrastructure will run the function every 1 hour based on our setting. The code itself is also vanilla: we will query data from our table written to above from the past 1 hour, find the averages, and then write that data back to GridDB Cloud on another table. Note that since this function solely relies on the Azure Function timer and GridDB, there is no need for special IoT Hub Connection String-type connection strings to grab. Here is the main python that Azure will run when the time is right: import logging import azure.functions as func import griddb_python as griddb from griddb_connector import GridDB from griddb_sql import GridDBJdbc from datetime import datetime import pyarrow as pa import pandas as pd import sys app = func.FunctionApp() @app.timer_trigger(schedule=”0 0 * * * *”, arg_name=”myTimer”, run_on_startup=True, use_monitor=False) def aggregations(myTimer: func.TimerRequest) -> None: if myTimer.past_due: logging.info(‘The timer is past due!’) logging.info(‘Python timer trigger function executed.’) nosql = None store = None ra = None griddb_jdbc = None try: print(“Attempting to connect to GridDB…”) nosql = GridDB() store = nosql.get_store() ra = griddb.RootAllocator(sys.maxsize) if not store: print(“Connection failed. Exiting script.”) sys.exit(1) griddb_jdbc = GridDBJdbc() if griddb_jdbc.conn: averages = griddb_jdbc.calculate_avg() nosql.pushAvg(averages) print(“\nScript finished successfully.”) except Exception as e: print(f”A critical error occurred in main: {e}”) finally: print(“Script execution complete.”) The rest of the code isn’t very interesting but let’s take a brief look. 
Here we are querying the last 1 hour of data and calculating the averages: import statistics def calculate_avg(self): try: curs = self.conn.cursor() queryStr = 'SELECT temperature, pressure, humidity FROM telemetryData WHERE ts BETWEEN TIMESTAMP_ADD(HOUR, NOW(), -1) AND NOW();' curs.execute(queryStr) if curs.description is None: print("Query returned no results or failed.") return None column_names = [desc[0] for desc in curs.description] all_rows = curs.fetchall() if not all_rows: print("No data found for the query range.") return None results = {name.lower(): [] for name in column_names} for row in all_rows: for i, name in enumerate(column_names): results[name.lower()].append(row[i]) averages = { 'temperature': statistics.mean(results['temperature']), 'humidity': statistics.mean(results['humidity']), 'pressure': statistics.mean(results['pressure']) } return averages except Exception as e: print(f"Failed to calculate averages: {e}") return None Note: for this function, we created the table beforehand (not in the Python code). Bonus Azure Kafka Event Hub We also set up an Event Hub function to query the last 5 minutes of telemetry data and stream it through Kafka. We ended up leaving this unused, but I’ve included it here because the source code already exists. It also uses a timer trigger and relies solely on the connection to GridDB Cloud. Azure’s Event Hub handles all of the complicated Kafka stuff under the hood; we just needed to return the data to be pushed through Kafka. Here is the source code: package net.griddb; import com.microsoft.azure.functions.ExecutionContext; import com.microsoft.azure.functions.annotation.EventHubOutput; import com.microsoft.azure.functions.annotation.FunctionName; import com.microsoft.azure.functions.annotation.TimerTrigger; import java.sql.SQLException; import java.util.ArrayList; import java.util.List; import java.util.logging.Level; public class GridDBPublisher { @FunctionName("GridDBPublisher") @EventHubOutput(name = "outputEvent", eventHubName = "griddb-telemetry",

GridDB Cloud version 3.1 is now out and there are some new features we would like to showcase, namely the main two: the ability to connect to your Cloud instance via an Azure Virtual Network (vnet) peering connection, and then also for a new way to authenticate your Web API Requests with web tokens (bearer tokens). In this article, we will first go through setting up a vnet peering connection to your GridDB Cloud Pay as you go plan. Then, we will briefly cover the sample code attached with this article. And then lastly, we will go over the new authentication method for the Web API and an example of implementing it into your app/workflow. Vnet Peering What exactly is a vnet peering connection? Here is the strict definition from the Azure docs: “Azure Virtual Network peering enables you to seamlessly connect two or more virtual networks in Azure, making them appear as one for connectivity purposes. This powerful feature allows you to create secure, high-performance connections between virtual networks while keeping all traffic on Microsoft’s private backbone infrastructure, eliminating the need for public internet routing.” In our case, we want to forge a secure connection between our virtual machine and the GridDB Cloud instance. With this in place, we can connect to GridDB Cloud via a normal java/python source code and use it outside of the context of the Web API. How to Set Up a Virtual Network Peering Connection Before you start on the GridDB Cloud side, you will first need to create some resources on the Microsoft Azure side. On Azure, create a Virtual Network (VNet). Azure Resources to Create Virtual Network Peering Connection You will also need to eventually set up a virtual machine that is on the same virtual network that you just created (which will be the one you link with GridDB Cloud below). With these two resources created, let’s move on to the GridDB Cloud side. 
> GridDB Cloud — Forging Virtual Network Peering Connection For this part of the process, you can read step-by-step instructions here in the official docs: https://www.toshiba-sol.co.jp/pro/griddbcloud/docs-en/v3_1/cloud_quickstart_guide_html/GridDB_Cloud_QuickStartGuide.html#connection-settings-for-vnet. From the network tab, click ‘Create peering connection’ and you’ll see something like this pop up. Here’s a summary of the steps needed to forge the vnet peering connection: From the navigation menu, select Network Access and then click the CREATE PEERING CONNECTION button. When the cloud provider selection dialog appears, leave the settings as-is and click NEXT. On the VNet Settings screen, enter your VNet information: Subscription ID Tenant ID Resource group name VNet name Click NEXT Run the command provided in the dialog to establish the VNet peering. You can run this in either: Azure Cloud Shell (recommended) Azure command-line interface (Azure CLI) After running the command, go to the VNet peering list screen and verify that the Status of the connection is Connected. All of these steps are also plainly laid out in the GridDB Cloud UI — it should be a fairly simple process through and through. Using the Virtual Network Peering Connection Once your connection is forged, you should be able to connect to the GridDB Cloud instance using the notification provider address provided in your GridDB Cloud UI Dashboard. If you want to do a very quick check before trying to run sample code, you can download the json file and use a third-party network tool like telnet to see if the machine is reachable. 
For example, download the json file, find the transaction address and port number, and then run telnet: $ wget [notification-provider-url] $ cat mfcloud2102.json { "cluster": {"address":"172.26.30.69", "port":10010}, "sync": {"address":"172.26.30.69", "port":10020}, "system": {"address":"172.26.30.69", "port":10040}, "transaction": {"address":"172.26.30.69", "port":10001}, "sql": {"address":"172.26.30.69", "port":20001} } $ telnet 172.26.30.69 10001 If a connection is made, everything is working properly. Sample Code And now you can run the sample code included in this repo. There is Java code for both NoSQL and SQL interfaces as well as Python code. To run the Java code, you need to install Java and Maven. The sample code will create tables and read those tables, but also expects you to ingest a csv dataset from Kaggle: https://www.kaggle.com/code/chaozhuang/iot-telemetry-sensor-data-analysis. We have included the csv file with this repo. Ingesting IoT Telemetry Data To ingest the dataset, first navigate to the griddbCloudDataImport dir inside of this repository. Within this dir, you will find that the GridDB Cloud Import tool is already installed. To use it, open up the .sh file and edit lines 34-36 to include your credentials. Next, you must run the Python file within this directory to clean up the data, namely changing the timestamp column to better adhere to the Web API’s standard, and also to separate out the csv into 3 distinct csv files, one for each device found in the dataset. Here is the line of code that will transform the ts col into something the GridDB Web API likes. df['ts'] = pd.to_datetime(df['ts'], unit='s').dt.strftime('%Y-%m-%dT%H:%M:%S.%f').str[:-3] + 'Z' You can make out from the format that the timestamp needs to look like this: "2020-07-12T00:01:34.385Z" so that it can be ingested using the tool. The data which is supplied directly in the CSV before transformation looks like this: "1.5945120943859746E9". 
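To make the transformation concrete for a single value, here is a small sketch that does the same conversion with only the Python standard library. It is not the script from the repo (which uses pandas); the function name epoch_seconds_to_webapi_ts is made up for this illustration:

```python
from datetime import datetime, timezone


def epoch_seconds_to_webapi_ts(raw):
    """Convert epoch seconds (a number or a string such as "1.59E9")
    into an ISO 8601 UTC string with millisecond precision."""
    seconds = float(raw)  # float() also accepts scientific notation
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    # %f yields six digits of microseconds; dropping the last three
    # leaves milliseconds, mirroring the .str[:-3] in the pandas line.
    return moment.strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


print(epoch_seconds_to_webapi_ts("1.5945120943859746E9"))
# prints 2020-07-12T00:01:34.385Z
```

The raw CSV value from above maps to exactly the timestamp format the import tool expects.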
Next, you must create the container within your GridDB Cloud. For this part, you can use the GridDB Cloud CLI tool or simply use the GridDB Cloud UI. The schema we want to ingest is found within the schema.json file in the directory. { “container”: “device1”, “containerType”: “TIME_SERIES”, “columnSet”: [ { “columnName”: “ts”, “type”: “timestamp”, “notNull”: true }, { “columnName”: “co”, “type”: “double”, “notNull”: false }, { “columnName”: “humidity”, “type”: “double”, “notNull”: false }, { “columnName”: “light”, “type”: “bool”, “notNull”: false }, { “columnName”: “lpg”, “type”: “double”, “notNull”: false }, { “columnName”: “motion”, “type”: “bool”, “notNull”: false }, { “columnName”: “smoke”, “type”: “double”, “notNull”: false }, { “columnName”: “temp”, “type”: “double”, “notNull”: false } ], “rowKeySet”: [ “ts” ] } To create the table with the tool, simply run: $ griddb-cloud-cli create schema.json. If using the UI, simply follow the UI prompts and follow the schema as shown here. Name the container device1. Once the container is ready to go, you can run the import tool: $ ./griddbCloudDataImport.sh device1 device1.csv. You can also use the GridDB Cloud CLI Tool to import: $ griddb-cloud-cli ingest device1.csv. Running The Sample Code (Java) Now that we have device1 defined and populated with data, let’s try running our sample code. 
$ cd sample-code/java $ export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk-23.jdk/Contents/Home $ mvn clean package And once the code is compiled, you need to set up your database parameters as your environment variables, and then from there, run the code: export GRIDDB_NOTIFICATION_PROVIDER=”[notification-provider-address]” export GRIDDB_CLUSTER_NAME=”[clustername]” export GRIDDB_USERNAME=”[username]” export GRIDDB_PASSWORD=”[password]” export GRIDDB_DATABASE=”[database name]” $ java -jar target/java-samples-1.0-SNAPSHOT-jar-with-dependencies.jar Running the sample code will run all of the java code, which includes connections to both JDBC and the NoSQL Interface. Here’s a quick breakdown of the Java Sample files included with this repo: The code in App.java simply runs the main function and all of the methods within the individual classes. The code in Device.java is the class schema for the dataset we ingest The code in GridDB.java is the NoSQL interface, connecting, creating tables, and querying from the dataset ingested above. The code also shows multiput, multiget, and various aggregation, time sampling etc examples. GridDBJdb.java shows connecting to GridDB via the JDBC interface and also shows creating a table and querying a table. Also shows a GROUP BY RANGE SQL query. Running The Sample Code (Python) For the python code, you will need to first install the Python client. There are good instructions found in the docs page: https://docs.griddb.net/gettingstarted/python.html. The following instructions are for debian-based systems: sudo apt install default-jdk export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64 git clone https://github.com/griddb/python_client.git cd python_client/java mvn install cd .. cd python python3.12 -m pip install . cd .. And once installed, you will need to have the .jar files in your $CLASSPATH. 
To make it easy, we have included the jars inside of the lib dir inside the python dir and a script to add the variables to your classpath: $ source ./set-env.sh $ echo $CLASSPATH Once you’ve got everything done, you will need your creds set up in your environment variables, similar to the Java section above. And from there, you can run the code: $ python3.12 main.py Web API (Basic Authentication vs. Bearer Tokens) The GridDB Web API is one of the methods of orchestrating your CRUD methods for your GridDB Cloud instance. Prior to this release, the only method of authenticating your HTTP Requests to your GridDB Cloud was something called Basic Authentication, which attaches your username and password to each web request, paired with an IP filtering firewall. Though this method was enough to keep things secure up until now, the GridDB team’s addition of web tokens greatly strengthens the authentication strategy for GridDB Cloud. The Dangers of Basic Authentication Before I get into how to work the Bearer token into your workflow, I will showcase a simple example of why Basic Authentication can be problematic and lead to issues down the line. Sending your user/pass credentials in every request leaves you extremely vulnerable to man-in-the-middle attacks. Not only that, but servers sometimes keep logs of all incoming headers, meaning your user:pass combo could potentially be stored in plaintext somewhere on some server. If you reuse passwords at all, your entire online presence could be compromised. I asked an LLM to put together a quick demo of a server reading your user and password combo. The LLM produced a server and a client; the client sends its user:pass as part of its headers and the server is able to intercept those and decode and store the user:pass in plaintext! 
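The reason the server can do this so easily is that Basic Authentication only Base64-encodes the credentials; nothing is encrypted. Here is a small sketch of that round trip in Python. The function names are made up for this illustration and are not part of the demo itself:

```python
import base64


def build_basic_header(username, password):
    """Build the Authorization header value a client sends with Basic auth."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_header(header_value):
    """Recover (username, password) from a Basic Authorization header value."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth header")
    decoded = base64.b64decode(encoded).decode("utf-8")
    # Only the first colon separates the two parts; passwords may contain colons.
    username, _, password = decoded.partition(":")
    return username, password


header = build_basic_header("MyUsername", "MySuperSecretPassword123")
print(header)
print(decode_basic_header(header))
```

Anyone who can see the header, whether an intercepting proxy or a log file, can run the second function and get the password back.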
After that, we send another request with a bearer token, and though the server can still read and intercept that, bearer tokens naturally expire and won’t expose your password, which may be used in other apps or elsewhere. Here is the output of the server: go run server.go Smarter attacker server listening on http://localhost:8080 --- NEW REQUEST RECEIVED --- Authorization Header: Basic TXlVc2VybmFtZTpNeVN1cGVyU2VjcmV0UGFzc3dvcmQxMjM= !!! 😱 BASIC AUTH INTERCEPTED !!! !!! DECODED: MyUsername:MySuperSecretPassword123 --- NEW REQUEST RECEIVED --- Authorization Header: Bearer eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJpc3JhZWwiLCJleHAiOjE3NjE3NjA3NjZ9.fake_signature_part I think this example is clear: sending your password in every request can cause major leakage of your secret data. This is bad! Bearer Tokens As shown in the example above, a Bearer token is attached to the request’s header, similar to the Basic Auth method, but it’s labeled as such (Bearer) and the token itself is a string that must be decoded and verified by the server. And though, as explained above, you do face a similar threat of somebody stealing your bearer token and being able to impersonate you, this damage is mitigated because the bearer token expires within an hour and doesn’t leak information outside of the scope of this database; not to mention, a bearer token can have granular scopes to limit damage even further. You can read more about them in our earlier posts about using them with GridDB: Protect your GridDB REST API with JSON Web Tokens Protect your GridDB REST API with JSON Web Tokens Part II How to use with GridDB Cloud This assumes you are familiar with GridDB Cloud in general and how to use the Web API. If you are not, please read the quickstart: GridDB Cloud Quickstart. To be issued an auth token from GridDB Cloud, you must use the web api endpoint: https://[cloud-id]griddb.com/griddb/v2/[cluster-name]/authenticate. 
You make a POST Request to that address with the body being your web api credentials (username, password). If successful, you will receive a JSON response with the access token string (the string you attach to your requests), and an expiry date for that token. From that point on, you can use that bearer token in all of your requests until it expires. Here’s a CURL example: curl --location 'https://[cloud-id]griddb.com/griddb/v2/[cluster-name]/authenticate' --header 'Content-Type: application/json' --data '{ "username": "israel", "password": "israel" }' RESPONSE { "accessToken": "eyJ0eXAiOiJBY2Nlc3MiLCJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJTMDE2anA3WkppLWlzcmFlbCIsImV4cCI6MTc2MTc2MDk4MCwicHciOiJ1ZXNDZlBRaCtFZXdhYjhWeC95SXBnPT0ifQ.1WynKNIwRLM7pOVhAi9itQh35gUnxlzyi85Vhw3xM8E", "expiredDate": "2025-10-29 18:03:00.653 +0000" } How to use the Bearer Token in an Application Now that you know how to obtain a bearer token, let’s take a look at a hands-on example of incorporating it into your application. In this case, we updated the GridDB Cloud CLI Tool to use bearer tokens instead of Basic Authentication. The workflow is as follows: the CLI tool will send each request with the bearer token, but before it does that, it first checks to see if a valid token exists. If it does, no problem, send the request with the proper header attached. If the token doesn’t exist or is expired, it will first use the user credentials (saved in a config file) to grab a new token and save the contents into a json file, which will then be read and attached to each request until the token expires again. First, we need to create a new type called TokenManager which will handle our tokens, including having methods for saving and loading the access string. 
type TokenManager struct { AccessToken string `json:"accessToken"` Expiration ExpireTime `json:"expiredDate"` mux sync.RWMutex `json:"-"` buffer time.Duration `json:"-"` } // Here create a new instance of our Token Manager func NewTokenManager() *TokenManager { m := &TokenManager{ buffer: 5 * time.Minute, } if err := m.loadToken(); err != nil { fmt.Println("No cached token found, will fetch a new one.") } return m } This struct will save our access token string into the user’s config dir in their filesystem and then load it before every request: func (m *TokenManager) saveToken() error { configDir, err := os.UserConfigDir() if err != nil { return err } cliConfigDir := filepath.Join(configDir, "griddb-cloud-cli") // Make sure the directory exists before writing the token file if err := os.MkdirAll(cliConfigDir, 0700); err != nil { return err } tokenPath := filepath.Join(cliConfigDir, "token.json") data, err := json.Marshal(m) if err != nil { return err } return os.WriteFile(tokenPath, data, 0600) } func (m *TokenManager) loadToken() error { configDir, err := os.UserConfigDir() if err != nil { return err } tokenPath := filepath.Join(configDir, "griddb-cloud-cli", "token.json") data, err := os.ReadFile(tokenPath) if err != nil { return err } return json.Unmarshal(data, m) } And here is the code for checking to see if the bearer token is expired: func (m *TokenManager) getAndAddValidToken(req *http.Request) error { m.mux.RLock() needsRefresh := time.Now().UTC().After(m.Expiration.Add(-m.buffer)) m.mux.RUnlock() if needsRefresh { m.mux.Lock() if time.Now().UTC().After(m.Expiration.Add(-m.buffer)) { if err := m.getBearerToken(); err != nil { m.mux.Unlock() return err } } m.mux.Unlock() } m.mux.RLock() defer m.mux.RUnlock() req.Header.Add("Authorization", "Bearer "+m.AccessToken) req.Header.Add("Content-Type", "application/json") return nil } And finally, here’s the code for actually grabbing a new bearer token and attaching it to each HTTP Request sent by the tool: func (m *TokenManager) getBearerToken() error { fmt.Println("--- REFRESHING TOKEN ---") if !(viper.IsSet("cloud_url")) { log.Fatal("Please provide a `cloud_url` in your config file! You can copy this directly from your Cloud dashboard") } configCloudURL := viper.GetString("cloud_url") parsedURL, err := url.Parse(configCloudURL) if err != nil { fmt.Println("Error parsing URL:", err) return err } newPath := path.Dir(parsedURL.Path) parsedURL.Path = newPath authEndpoint, _ := parsedURL.Parse("./authenticate") authURL := authEndpoint.String() method := "POST" user := viper.GetString("cloud_username") pass := viper.GetString("cloud_pass") payloadStr := fmt.Sprintf(`{"username": "%s", "password": "%s" }`, user, pass) payload := strings.NewReader(payloadStr) client := &http.Client{} req, err := http.NewRequest(method, authURL, payload) if err != nil { log.Fatal(err) } req.Header.Add("Content-Type", "application/json") resp, err := client.Do(req) if err != nil { fmt.Println("error with client DO: ", err) return err } // Close the response body once we are done reading it defer resp.Body.Close() CheckForErrors(resp) body, err := io.ReadAll(resp.Body) if err != nil { return err } if err := json.Unmarshal(body, m); err != nil { log.Fatalf("Error unmarshaling access token %s", err) } if err := m.saveToken(); err != nil { fmt.Println("Warning: Could not save token to cache:", err) } return nil } func MakeNewRequest(method, endpoint string, body io.Reader) (req *http.Request, e error) { if !(viper.IsSet("cloud_url")) { log.Fatal("Please provide a `cloud_url` in your config file! You can copy this directly from your Cloud dashboard") } url := viper.GetString("cloud_url") req, err := http.NewRequest(method, url+endpoint, body) if err != nil { fmt.Println("error with request:", err) return req, err } if err := tokenManager.getAndAddValidToken(req); err != nil { return req, err } return req, nil } As usual, source code can be found in the GitHub page for the tool
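If your own application is not written in Go, the refresh decision is easy to port because it boils down to one comparison: refresh when the current time is later than the expiry minus a safety buffer. Here is a rough sketch in Python, assuming the expiredDate format shown in the authenticate response earlier and the same 5-minute buffer as the TokenManager; the function names are hypothetical and not part of the CLI tool:

```python
from datetime import datetime, timedelta, timezone

# Matches an expiredDate such as "2025-10-29 18:03:00.653 +0000".
EXPIRY_FORMAT = "%Y-%m-%d %H:%M:%S.%f %z"
# Same 5-minute safety buffer as the Go TokenManager.
REFRESH_BUFFER = timedelta(minutes=5)


def parse_expiry(expired_date):
    """Parse the expiredDate string into a timezone-aware datetime."""
    return datetime.strptime(expired_date, EXPIRY_FORMAT)


def needs_refresh(expired_date, now=None, buffer=REFRESH_BUFFER):
    """Return True when the token has expired or will expire within `buffer`."""
    now = datetime.now(timezone.utc) if now is None else now
    return now > parse_expiry(expired_date) - buffer
```

The buffer means a token is treated as stale slightly before the server would reject it, so a request never goes out with a token that expires in flight.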

Project Overview A modern web application that transforms spoken descriptions into high-quality images using cutting-edge AI technologies. Users can record their voice describing an image they want to create, and the system will transcribe their speech and generate a corresponding image. What Problem We Solved Traditional image generation tools require users to type detailed prompts, which can be: Time-consuming for complex descriptions. Limiting for users with typing difficulties. Less natural than speaking. This project makes AI image generation more accessible through voice interaction. Architecture & Tech Stack This diagram shows a pipeline for converting speech into images and storing the result in the GridDB database: User speaks into a microphone. Speech recording captures the audio. Audio is sent to ElevenLabs (Scriber-1) for speech-to-text transcription. The transcribed text becomes a prompt for Imagen 4 API, which generates an image. The data saved into the database are: the audio reference, the image, and the prompt text. Frontend Stack In this project, we will use Next.js as the frontend framework. Backend Stack There is no specific backend code because all services used are from APIs. There are three main APIs: 1. Speech-to-Text API This project utilizes the ElevenLabs API for speech-to-text transcription. The API is at https://api.elevenlabs.io/v1/speech-to-text. ElevenLabs also provides a JavaScript SDK for easier API integration. You can see the SDK documentation for more details. 2. Image Generation API This project uses Imagen 4 API from fal. The API is hosted on https://fal.ai/models/fal-ai/imagen4/preview. Fal provides a JavaScript SDK for easier API integration. You can see the SDK documentation for more details. 3. Database API We will use the GridDB Cloud version in this project, so there is no need for local installation. Please read the next section on how to set up GridDB Cloud. 
Prerequisites

Node.js

This project is built using Next.js, which requires Node.js version 16 or higher. You can download and install Node.js from https://nodejs.org/en.

GridDB

Sign Up for GridDB Cloud Free Plan

If you would like to sign up for a GridDB Cloud Free instance, you can do so at the following link: https://form.ict-toshiba.jp/download_form_griddb_cloud_freeplan_e. After successfully signing up, you will receive a free instance along with the details needed to access the GridDB Cloud Management GUI, including the GridDB Cloud Portal URL, Contract ID, Login, and Password.

GridDB WebAPI URL

Go to the GridDB Cloud Portal and copy the WebAPI URL from the Clusters section. It should look like this:

GridDB Username and Password

Go to the GridDB Users section of the GridDB Cloud portal and create or copy the username for GRIDDB_USERNAME. The password is set when the user is first created; use it as the GRIDDB_PASSWORD. For more details on getting started with GridDB Cloud, please follow this quick start guide.

IP Whitelist

When running this project, please ensure that the IP address of the machine where the project runs is whitelisted. Otherwise, requests will fail with a 403 Forbidden status code. You can use a website like What Is My IP Address to find your public IP address. To whitelist the IP, go to the GridDB Cloud Admin and navigate to the Network Access menu.

ElevenLabs

You need an ElevenLabs account and API key to use this project. You can sign up for an account at https://elevenlabs.io/signup. After signing up, go to the Account section, then create and copy your API key.

Imagen 4 API

You need a fal API key to use Imagen 4 in this project. You can sign up for an account at https://fal.ai. After signing up, go to the Account section, then create and copy your API key.

How to Run

1. Clone the repository

Clone the repository from https://github.com/junwatu/speech-image-gen to your local machine.
    git clone https://github.com/junwatu/speech-image-gen.git
    cd speech-image-gen
    cd apps

2. Install dependencies

Install all project dependencies using npm.

    npm install

3. Set up environment variables

Copy the file .env.example to .env and fill in the values:

    # Copy this file to .env.local and add your actual API keys
    # Never commit .env.local to version control

    # Fal.ai API Key for Imagen 4
    # Get your key from: https://fal.ai/dashboard
    FAL_KEY=

    # ElevenLabs API Key for Speech-to-Text
    # Get your key from: https://elevenlabs.io/app/speech-synthesis
    ELEVENLABS_API_KEY=

    GRIDDB_WEBAPI_URL=
    GRIDDB_PASSWORD=
    GRIDDB_USERNAME=

Please see the Prerequisites section for how to obtain these values before running the project.

4. Run the project

Run the project using the following command:

    npm run dev

5. Open the application

Open the application in your browser at http://localhost:3000. You also need to allow the browser to access your microphone.

Implementation Details

Speech Recording

The user speaks into the microphone and the audio is recorded. The audio is then sent to the ElevenLabs API for speech-to-text transcription. Note that the only supported language is English.

The code that records the audio is in the main page.tsx. It uses the browser's native MediaRecorder API to record the audio.
Below is the snippet code:

    const startRecording = useCallback(async () => {
      try {
        setError(null);
        const stream = await navigator.mediaDevices.getUserMedia({
          audio: {
            echoCancellation: true,
            noiseSuppression: true,
            sampleRate: 44100
          }
        });

        // Try different MIME types based on browser support
        let mimeType = 'audio/webm;codecs=opus';
        if (!MediaRecorder.isTypeSupported(mimeType)) {
          mimeType = 'audio/webm';
          if (!MediaRecorder.isTypeSupported(mimeType)) {
            mimeType = 'audio/mp4';
            if (!MediaRecorder.isTypeSupported(mimeType)) {
              mimeType = ''; // Let browser choose
            }
          }
        }

        const mediaRecorder = new MediaRecorder(stream, {
          ...(mimeType && { mimeType })
        });
        mediaRecorderRef.current = mediaRecorder;
        audioChunksRef.current = [];
        recordingStartTimeRef.current = Date.now();

        mediaRecorder.ondataavailable = (event) => {
          if (event.data.size > 0) {
            audioChunksRef.current.push(event.data);
          }
        };

        mediaRecorder.onstop = async () => {
          const duration = Date.now() - recordingStartTimeRef.current;
          const audioBlob = new Blob(audioChunksRef.current, {
            type: mimeType || 'audio/webm'
          });
          const audioUrl = URL.createObjectURL(audioBlob);
          const recording: AudioRecording = {
            blob: audioBlob,
            url: audioUrl,
            duration,
            timestamp: new Date()
          };
          setCurrentRecording(recording);
          await transcribeAudio(recording);
          stream.getTracks().forEach(track => track.stop());
        };

        mediaRecorder.start(1000); // Collect data every second
        setIsRecording(true);
      } catch (error) {
        setError('Failed to access microphone. Please check your permissions and try again.');
      }
    }, []);

The audio processing flow is as follows:

1. The user clicks the record button → startRecording() is called.
2. It requests microphone access via getUserMedia().
3. It creates a MediaRecorder with optimal settings.
4. It collects audio data in chunks.
5. When stopped, it creates an audio blob and triggers transcription.

The audio data will be saved in the public/uploads/audio folder.
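The nested MIME type checks in startRecording() can also be written as a first-match search over a list of candidates. The sketch below is an alternative formulation, not the project's code: `pickMimeType` and `extensionFor` are hypothetical helpers, and the support check is passed in as a function so the logic can run outside the browser.

```typescript
// Candidate types in order of preference, mirroring the checks in startRecording().
const CANDIDATE_MIME_TYPES: readonly string[] = [
  "audio/webm;codecs=opus",
  "audio/webm",
  "audio/mp4",
];

// Returns the first supported candidate, or "" to let the browser choose.
function pickMimeType(
  isSupported: (mimeType: string) => boolean,
  candidates: readonly string[] = CANDIDATE_MIME_TYPES
): string {
  for (const candidate of candidates) {
    if (isSupported(candidate)) {
      return candidate;
    }
  }
  return "";
}

// Maps a MIME type to a file extension (hypothetical helper).
// Anything that is not audio/mp4 falls back to "webm".
function extensionFor(mimeType: string): string {
  const [base = ""] = mimeType.split(";"); // drop parameters such as ";codecs=opus"
  return base.trim().toLowerCase() === "audio/mp4" ? "mp4" : "webm";
}
```

In the browser, the call would look like `pickMimeType((t) => MediaRecorder.isTypeSupported(t))`, and the resulting type can be mapped to an extension when the recording is written to disk.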
Below is the snippet code to save the audio file:

    export async function saveAudioToFile(audioBlob: Blob, extension: string = 'webm'): Promise<string> {
      // Create uploads directory if it doesn't exist
      const uploadsDir = join(process.cwd(), 'public', 'uploads', 'audio');
      await mkdir(uploadsDir, { recursive: true });

      // Generate unique filename
      const filename = `${generateRandomID()}.${extension}`;
      const filePath = join(uploadsDir, filename);

      // Convert blob to buffer and save file
      const arrayBuffer = await audioBlob.arrayBuffer();
      const buffer = Buffer.from(arrayBuffer);
      await writeFile(filePath, buffer);

      // Return relative path for storage in database
      return `/uploads/audio/${filename}`;
    }

The full code for the saveAudioToFile() function is in the app/lib/audio-storage.ts file.

Speech to Text Transcription

The recorded audio is sent to the ElevenLabs API for speech-to-text transcription. The code that sends the audio to the ElevenLabs API is in the transcribeAudio() function. The full code is in the lib/elevenlabs-client.ts file.

    // Main transcription function
    export async function transcribeAudio(
      client: ElevenLabsClient,
      audioBuffer: Buffer,
      modelId: ElevenLabsModel = ELEVENLABS_MODELS.SCRIBE_V1
    ) {
      try {
        const result = await client.speechToText.convert({
          audio: audioBuffer,
          model_id: modelId,
        }) as TranscriptionResponse;

        return {
          success: true,
          text: result.text,
          language_code: result.language_code,
          language_probability: result.language_probability,
          words: result.words || [],
          additional_formats: result.additional_formats || []
        };
      } catch (error) {
        console.error('ElevenLabs transcription error:', error);
        return {
          success: false,
          error: error instanceof Error ? error.message : 'Unknown error'
        };
      }
    }

Transcription Route

The transcribeAudio() function is called when accessing the /api/transcribe route. This route only accepts the POST method and processes the audio file sent in the request body.
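Because the route receives the audio as an uploaded file, it is worth validating the upload before sending it for transcription. The following is a minimal sketch of such a guard, not code from the project: `validateAudioUpload`, the `UploadLike` shape, and the 25 MB limit are all assumptions chosen for illustration.

```typescript
// Minimal structural view of an uploaded file (a File or Blob satisfies it).
interface UploadLike {
  size: number;
  type: string;
}

type ValidationResult =
  | { ok: true }
  | { ok: false; status: number; error: string };

// Assumed upper bound for illustration; adjust to the service's real limits.
const MAX_AUDIO_BYTES = 25 * 1024 * 1024;

function validateAudioUpload(file: UploadLike | null | undefined): ValidationResult {
  if (!file) {
    return { ok: false, status: 400, error: "No audio file provided" };
  }
  if (file.size === 0) {
    return { ok: false, status: 400, error: "Audio file is empty" };
  }
  if (file.size > MAX_AUDIO_BYTES) {
    return { ok: false, status: 413, error: "Audio file is too large" };
  }
  // An empty type is tolerated because some clients omit it.
  if (file.type !== "" && file.type.indexOf("audio/") !== 0) {
    return { ok: false, status: 415, error: "Unsupported media type: " + file.type };
  }
  return { ok: true };
}
```

A route handler could call this on the value returned by `formData.get('audio')` and respond with the given status code when `ok` is false.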
The ELEVENLABS_API_KEY environment variable in the .env file is used in the route to initialize the ElevenLabs client.

    export async function POST(request: NextRequest) {
      // Read the API key from the environment
      const apiKey = process.env.ELEVENLABS_API_KEY;

      // Get audio file from form data
      const formData = await request.formData();
      const audioFile = formData.get('audio') as File;

      // Convert to buffer
      const arrayBuffer = await audioFile.arrayBuffer();
      const audioBuffer = Buffer.from(arrayBuffer);

      // Wrap the buffer in a Blob to pass to the SDK
      const audioBlob = new Blob([audioBuffer], { type: audioFile.type });

      // Initialize ElevenLabs client
      const elevenlabs = new ElevenLabsClient({ apiKey: apiKey });

      // Convert audio to text
      const result = await elevenlabs.speechToText.convert({
        file: audioBlob,
        modelId: "scribe_v1",
        languageCode: "en",
        tagAudioEvents: true,
        diarize: false,
      });

      return NextResponse.json({
        transcription: result.text,
        language_code: result.languageCode,
        language_probability: result.languageProbability,
        words: result.words
      });
    }

The route will return the following JSON object:

    {
      "transcription": "Transcribed text from the audio",
      "language_code": "en",
      "language_probability": 0.99,
      "words": [
        {
          "start": 0.0,
          "end": 1.0,
          "word": "Transcribed",
          "probability": 0.99
        },
        // ... more words
      ]
    }

The transcribed text serves as the input prompt for image generation using Imagen 4 from fal.ai, which creates high-quality images based on the provided text description.

Image Generation

The Fal API endpoint used is fal-ai/imagen4/preview. You must have a Fal API key to use this endpoint and set the FAL_KEY in the .env file. See the Prerequisites section for how to get the API key.

The Fal Imagen 4 image generation API is called directly in the /api/generate-image route. The route creates the image using the subscribe() method from the @fal-ai/client SDK package.
    export async function POST(request: NextRequest) {
      const { prompt, style = 'photorealistic' } = await request.json();

      // Configure fal client
      fal.config({
        credentials: process.env.FAL_KEY || ''
      });

      // Generate image using fal.ai Imagen 4
      const result = await fal.subscribe("fal-ai/imagen4/preview", {
        input: {
          prompt: prompt,
          // Add style to prompt if needed
          ...(style !== 'photorealistic' && { prompt: `${prompt}, ${style} style` })
        },
        logs: true,
        onQueueUpdate: (update) => {
          if (update.status === "IN_PROGRESS") {
            update.logs.map((log) => log.message).forEach(console.log);
          }
        },
      });

      // Extract image URLs from the result
      const images = result.data?.images || [];
      const imageUrls = images.map((img: any) => img.url || img);

      return NextResponse.json({
        images: imageUrls,
        prompt: prompt,
        style: style,
        requestId: result.requestId
      });
    }

The route will return JSON with the following structure:

    {
      "images": [
        "https://v3.fal.media/files/panda/YCl2K_C4yG87sDH_riyJl_output.png"
      ],
      "prompt": "Floating red jerry can on the blue sea, wide shot, side view",
      "style": "photorealistic",
      "requestId": "8a0e13db-5760-48d4-9acd-5c793b14e1ee"
    }

The image data, along with the prompt and audio file path, will be saved into the GridDB database.

Database Operation

We use GridDB Cloud to save the generated image, the prompt, and the audio file path. It is easy to use and accessible through a Web API. The container name for this project is genvoiceai.

Save Data to GridDB

Before saving data to the database, we need to define its schema. We will use the following data structure for this project:

    export interface GridDBData {
      id: string | number;
      images: Blob;       // Stored as base64 string
      prompts: string;    // Text prompt
      audioFiles: string; // File path to audio file
    }

In real-world applications, best practice is to separate binary files from their references.
However, for simplicity in this example, we store the image directly in the database as a base64-encoded string. Before saving to the database, the image needs to be converted to base64 format:

    // Convert image blob to base64 string for GridDB storage
    const imageBuffer = await imageBlob.arrayBuffer();
    const imageBase64 = Buffer.from(imageBuffer).toString('base64');

Please look into the lib/griddb.ts file for the implementation details. The insertData() function performs the actual database insertion.

    async function insertData({ data, containerName = 'genvoiceai' }) {
      const row = [
        parseInt(data.id.toString()), // ID as integer
        data.images,                  // Base64 image string
        data.prompts,                 // Text prompt
        data.audioFiles               // Audio file path
      ];
      const path = `/containers/${containerName}/rows`;
      return await makeRequest(path, [row], 'PUT');
    }

Get Data from GridDB

To get data from the database, send a GET request to the /api/save-data route. This route uses an SQL query to get specific or all data from the database.

    // For specific ID
    query = {
      type: 'sql-select',
      stmt: `SELECT * FROM genvoiceai WHERE id = ${parseInt(id)}`
    };

    // For recent entries
    query = {
      type: 'sql-select',
      stmt: `SELECT * FROM genvoiceai ORDER BY id DESC LIMIT ${parseInt(limit)}`
    };

For the detailed code implementation, please look into the app/api/save-data/route.ts file.

Server Routes

This project uses Next.js serverless functions to handle API requests. This means there is no separate backend code to handle APIs, as they are integrated directly into the Next.js application.
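Because these route handlers receive raw request parameters, a route such as GET /api/save-data should validate values before placing them into SQL text: parseInt() returns NaN for non-numeric input and silently accepts strings like "12abc". The helpers below are a hypothetical sketch of stricter parsing; the names `toNonNegativeInt`, `selectById`, and `selectRecent`, the default of 10 rows, and the cap of 100 rows are assumptions and not part of the project.

```typescript
interface SqlSelect {
  type: "sql-select";
  stmt: string;
}

const DEFAULT_LIMIT = 10; // assumed default page size
const MAX_LIMIT = 100;    // assumed upper bound on rows per request

// Accepts only plain decimal digits; returns null for anything else.
function toNonNegativeInt(value: string | number): number | null {
  const text = String(value).trim();
  if (!/^\d+$/.test(text)) {
    return null;
  }
  const n = parseInt(text, 10);
  return n <= 9007199254740991 ? n : null; // stay within the safe integer range
}

// Builds the "specific ID" query, rejecting invalid ids instead of emitting NaN.
function selectById(id: string | number): SqlSelect {
  const n = toNonNegativeInt(id);
  if (n === null) {
    throw new Error("id must be a non-negative integer");
  }
  return { type: "sql-select", stmt: `SELECT * FROM genvoiceai WHERE id = ${n}` };
}

// Builds the "recent entries" query, falling back to a default and capping the limit.
function selectRecent(limit: string | number = DEFAULT_LIMIT): SqlSelect {
  const n = toNonNegativeInt(limit);
  const rows = n === null || n === 0 ? DEFAULT_LIMIT : Math.min(n, MAX_LIMIT);
  return {
    type: "sql-select",
    stmt: `SELECT * FROM genvoiceai ORDER BY id DESC LIMIT ${rows}`,
  };
}
```

Rejecting bad ids with an error lets the route return a 400 response, while an invalid limit simply falls back to the default.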
The routes used by the frontend are as follows:

| Route | Method | Description |
| --- | --- | --- |
| /api/generate-image | POST | Generate images using fal.ai Imagen 4 |
| /api/transcribe | POST | Convert audio to text using ElevenLabs |
| /api/save-data | POST | Save image, prompt, and audio data to GridDB |
| /api/save-data | GET | Retrieve saved data from GridDB |
| /api/audio/[filename] | GET | Serve audio files from uploads directory |

User Interface

The main entry of the frontend is in the page.tsx file. The user interface is a single-page application built with Next.js, with several key sections:

- Voice Recording Section: Large microphone button for audio recording.
- Transcribed Text Display: Shows the converted speech-to-text with language detection. You can also edit the prompt here before generating the image.
- Style Selection: A dropdown menu that allows users to choose different image generation styles, including photorealistic, artistic, anime, and abstract styles.
- Generated Images Grid: Displays created images with download/save options.
- Saved Data Viewer: Shows previously saved generations from the database.

The saved data is displayed in the Saved Data Viewer section. You can show and hide it by clicking the Show Saved button on the top right. Each saved entry includes the image, the prompt used to generate it, the audio reference, and the request ID. You can also play the audio and download the image.

Future Enhancements

This project is a basic demo and can be further enhanced with additional features, such as:

- User authentication and authorization for saved data.
- Image editing or customization options.
- Integration with other AI models for image generation.
- Speech recognition improvements for different languages. Currently, it supports only