import os
import pandas as pd
import numpy as np
import requests
import subprocess
from pathlib import Path
import zipfile
import kagglehub
import shutil
import json
import base64
import http.client

http.client.HTTPConnection.debuglevel = 0  # Keep low-level HTTP debug logging off

from IPython.display import Image, display, HTML
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, pipeline  # Generative Model
import sentencepiece  # Generative Model
import faiss  # Search & Indexing
from sentence_transformers import SentenceTransformer  # Retrieval Model
from collections import OrderedDict  # Standardising Results

Introduction

In this article, we use GridDB and FAISS together to build a hybrid RAG (Retrieval-Augmented Generation) system. Open-source libraries like FAISS make it easy to index text embeddings and search them for semantic similarity, while a high-performance database like GridDB can use structured queries to narrow a large corpus of unstructured text down to the relevant subset. Running the structured filter first and the semantic search second means FAISS only has to embed and search that subset, which can reduce operational overhead and computational cost when mining large volumes of unstructured data.
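To make the two-stage idea concrete, here is a minimal, self-contained sketch of filter-then-rank retrieval. It is not the article's pipeline: a plain list filter stands in for the GridDB query, brute-force cosine similarity stands in for the FAISS index, and the records, categories, and 3-dimensional vectors are made up for illustration.

```python
import math

# Toy corpus: structured metadata (category) plus a pre-computed embedding.
# The 3-dimensional vectors are invented; real sentence embeddings are much larger.
RECORDS = [
    {"id": 1, "category": "TECH", "vec": [0.9, 0.1, 0.0]},
    {"id": 2, "category": "TECH", "vec": [0.1, 0.9, 0.0]},
    {"id": 3, "category": "POLITICS", "vec": [0.95, 0.05, 0.0]},
    {"id": 4, "category": "WELLNESS", "vec": [0.0, 0.2, 0.9]},
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(records, category, query_vec, k=2):
    # Stage 1 (structured): keep only rows matching the metadata filter,
    # the role the GridDB query plays in the article.
    subset = [r for r in records if r["category"] == category]
    # Stage 2 (semantic): rank only that subset by similarity to the query,
    # the role FAISS plays (replaced here by brute-force cosine similarity).
    ranked = sorted(subset, key=lambda r: cosine(r["vec"], query_vec), reverse=True)
    return [r["id"] for r in ranked[:k]]


print(hybrid_search(RECORDS, "TECH", [1.0, 0.0, 0.0]))  # prints [1, 2]
```

Record 3 is the closest match to the query overall, but the structured filter removes it before ranking, so only the two TECH records are scored. With FAISS, the second stage would instead search an index built over the filtered subset (for example `faiss.IndexFlatIP` over L2-normalized vectors, which ranks by cosine similarity).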

The dataset we use is the Kaggle News Category Dataset. It contains news headlines and metadata collected between 2012 and 2022, with short descriptions of articles in categories such as politics and wellness. The dataset can be downloaded with the Python library 'kagglehub', or manually using the 'Download' option on https://www.kaggle.com/datasets/rmisra/news-category-dataset/data. Below is a snapshot of the download option.

Image(filename='Kagglehub_Dataset.png',width=800, height=500)

Downloading the Dataset

# Download the dataset and get the path of its local folder
dataset_dir = kagglehub.dataset_download("rmisra/news-category-dataset")

# Destination: the current working directory
dest_dir = os.getcwd()

# Copy all files from kagglehub's dataset directory to the current working directory
for filename in os.listdir(dataset_dir):
    src_file = os.path.join(dataset_dir, filename)
    dst_file = os.path.join(dest_dir, filename)
    # Only copy regular files (skip subdirectories)
    if os.path.isfile(src_file):
        shutil.copy(src_file, dst_file)
        print(f"Copied {filename} to {dst_file}")

print("All files copied.")

# Path to the downloaded file (adjust the name if it differs in your download)
json_path = "News_Category_Dataset_v3.json"

# The file is in JSON Lines format: one JSON object per line (blank lines are skipped)
with open(json_path, 'r', encoding='utf-8') as f:
    data = [json.loads(line) for line in f if line.strip()]

# Convert to a DataFrame
news_data = pd.DataFrame(data)

Standardizing and Subsetting Data for Ingestion (GridDB)

trending_categories = [

"WELLNESS", "TECH", "SCIENCE",

"TRAVEL", "STYLE & BEAUTY", "ENTERTAINMENT","POLITICS"]

news_trending = news_data[news_data['category'].isin(trending_categories)].copy()

news_trending['category'].value_counts()

category
POLITICS          35602
WELLNESS          17945
ENTERTAINMENT     17362
TRAVEL             9900
STYLE & BEAUTY     9814
SCIENCE            2206
TECH               2104
Name: count, dtype: int64

Adding the id and text Columns to the DataFrame ('id' as the Row Key)

- Add a unique ID column (we create it to serve as the row key of the collection container)

- Create a combined text column from the headline and short description (to use as the embedding input for RAG)

- Then split the data into chunks and upload it

news_trending = news_trending.reset_index(drop=True)

news_trending['id'] = news_trending.index + 1

news_trending['text'] = news_trending['headline'] + " — " + news_trending['short_description']

Connecting to GridDB

username = "XX"  # Place your username here
password = "XX"  # Place your password here

credentials = f"{username}:{password}"
encoded_credentials = base64.b64encode(credentials.encode()).decode()

# base64 is an encoding, not encryption: treat this printed value like the password itself
print(f"Encoded credentials: Basic {encoded_credentials}")

# Construct an object to hold the request headers (the Authorization value is built from the credentials above)
header_obj = {
    "Authorization": f"Basic {encoded_credentials}",
    "Content-Type": "application/json; charset=UTF-8",  # charset belongs inside Content-Type
    "User-Agent": "PostmanRuntime/7.29.0"
}

# Construct the base URL for the GridDB cluster you'd like to connect to (replace the placeholders
# in the URL below with the values that correspond to your GridDB instance)
base_url = 'https://[host]:[port]/griddb/v2/[clustername]/dbs/[database_name]/'
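The Authorization header built above follows the HTTP Basic scheme: the string `username:password` is base64-encoded and prefixed with `Basic `. The sketch below shows that this encoding is fully reversible, so the header is only protected by HTTPS. The helper names are hypothetical, and the credentials are the example pair from the HTTP Basic authentication specification (RFC 7617), not GridDB values.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value from a username and password."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header_value: str) -> tuple:
    """Recover (username, password) from a Basic header value, showing it is reversible."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic Authorization header")
    # Split at the first colon only: a username may not contain ':', a password may
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


header = basic_auth_header("Aladdin", "open sesame")
print(header)                     # prints Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
print(decode_basic_auth(header))  # prints ('Aladdin', 'open sesame')
```

As an alternative to building the header by hand, `requests` produces the same Basic header when you pass `auth=(username, password)` to `requests.post` or `requests.put`.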

Generating Container Structures for Use in GridDB

The following functions:

- map the data types of the DataFrame's columns to the corresponding GridDB data types.

- build the container structure that is passed to the Web API.

def map_dtype_to_griddb(dtype):
    """
    Maps Pandas data types to GridDB data types.
    """
    if pd.api.types.is_integer_dtype(dtype):
        return "INTEGER"  # GridDB INTEGER is 32-bit; use "LONG" for larger values
    elif pd.api.types.is_float_dtype(dtype):
        return "DOUBLE"
    elif pd.api.types.is_bool_dtype(dtype):
        return "BOOL"
    elif pd.api.types.is_datetime64_any_dtype(dtype):
        return "LONG"  # DataFrame.to_json sends datetimes as epoch milliseconds by default
    else:
        return "STRING"


def generate_griddb_data_obj(df, container_name="MyContainer", container_type="COLLECTION", rowkey=False):
    """
    Generates a GridDB container data object for API request.
    """
    columns = []
    for col in df.columns:
        griddb_type = map_dtype_to_griddb(df[col].dtype)
        columns.append({"name": col, "type": griddb_type})

    data_obj = {
        "container_name": container_name,
        "container_type": container_type,
        "rowkey": rowkey,
        "columns": columns
    }
    return data_obj
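To preview the request body these functions produce without loading the full dataset, the following stdlib-only sketch applies the same mapping to the values of a single Python dictionary. The helper names and the sample record (including its `score` and `is_video` fields) are hypothetical. One difference from the pandas version is worth noting: pandas does not report a boolean column as an integer dtype, but a raw Python `True` does pass an `isinstance(..., int)` check, so the boolean test has to come first.

```python
import json


def infer_griddb_type(value):
    """Map a plain Python value to a GridDB column type name (illustrative only)."""
    # Check bool before int: bool is a subclass of int in Python,
    # so isinstance(True, int) is also True
    if isinstance(value, bool):
        return "BOOL"
    if isinstance(value, int):
        return "INTEGER"
    if isinstance(value, float):
        return "DOUBLE"
    return "STRING"


def container_payload_from_row(row, container_name, container_type="COLLECTION", rowkey=False):
    """Build a container-creation body from one sample record (a dict)."""
    columns = [{"name": name, "type": infer_griddb_type(value)} for name, value in row.items()]
    return {
        "container_name": container_name,
        "container_type": container_type,
        "rowkey": rowkey,
        "columns": columns,
    }


sample = {"id": 1, "headline": "Example headline", "score": 0.5, "is_video": False}
print(json.dumps(container_payload_from_row(sample, "news_demo"), indent=2))
```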

Creating Containers in GridDB

GridDB supports two types of containers: collections and time series. Collections are general-purpose containers that store rows much like relational tables, while time-series containers are optimized for time-stamped data such as IoT and sensor readings. In this case, we store the unstructured article text and its metadata in a collection container. Refer to this resource to learn more.
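In the Web API, the choice between the two container types shows up in the container-creation request body. The sketch below builds both bodies side by side; the container and column names are hypothetical, and the time-series layout reflects our understanding of GridDB's rules (a `TIME_SERIES` container is keyed on a leading `TIMESTAMP` column), so check it against the Web API documentation for your version.

```python
import json


def collection_payload(name, columns, rowkey=False):
    """Container body for a general-purpose collection (table-like rows)."""
    return {"container_name": name, "container_type": "COLLECTION",
            "rowkey": rowkey, "columns": columns}


def timeseries_payload(name, value_columns, time_column="ts"):
    """Container body for a time-series container.

    Assumption: the first column is a TIMESTAMP that acts as the row key.
    """
    columns = [{"name": time_column, "type": "TIMESTAMP"}] + value_columns
    return {"container_name": name, "container_type": "TIME_SERIES",
            "rowkey": True, "columns": columns}


news_payload = collection_payload("news_demo", [
    {"name": "id", "type": "INTEGER"},
    {"name": "headline", "type": "STRING"},
    {"name": "category", "type": "STRING"},
])
sensor_payload = timeseries_payload("sensor_demo", [{"name": "temperature", "type": "DOUBLE"}])
print(json.dumps(sensor_payload, indent=2))
```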

data_obj = generate_griddb_data_obj(news_trending, container_name="news_category_data")

# Set up the GridDB WebAPI URL
url = base_url + 'containers'

# Invoke the GridDB WebAPI with the headers and the request body
x = requests.post(url, json=data_obj, headers=header_obj)
print(x.status_code, x.text)  # A 2xx status code indicates the container was created

Row Registration & the Role of a rowkey in Collection Containers

Row registration in GridDB is analogous to inserting rows into a table in a relational (SQL) database. Refer to the GridDB Data Model to learn more about row registration and how GridDB processes transactions.

# Serialize the DataFrame as a JSON string: an array of rows, each row an array of values in column order
request_body_news_data = news_trending.to_json(orient='values')

# Set up the URL used to invoke the GridDB WebAPI to register rows in the container created previously
url = base_url + 'containers/news_category_data/rows'

# Registering every row in one request would send a single very large body,
# so this call is left commented out; the rows are uploaded in chunks in the next section
#x = requests.put(url, data=request_body_news_data, headers=header_obj)
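The row-registration body is a JSON array of rows, where each row is itself an array of values in the same order as the container's columns. That is the shape `to_json(orient='values')` produces, and the order lines up because the container was generated from the same DataFrame. Here is a stdlib sketch of the same serialization, using a hypothetical three-column container and made-up records:

```python
import json

# Hypothetical container column order; every row array must follow it
COLUMNS = ["id", "headline", "category"]

records = [
    {"id": 1, "headline": "First headline", "category": "TECH"},
    {"id": 2, "headline": "Second headline", "category": "SCIENCE"},
]


def to_row_body(rows, columns):
    """Serialize dict records as a JSON array of arrays, one inner array per row."""
    return json.dumps([[row[col] for col in columns] for row in rows])


body = to_row_body(records, COLUMNS)
print(body)  # prints [[1, "First headline", "TECH"], [2, "Second headline", "SCIENCE"]]
```

pandas writes compact JSON without spaces after commas, while `json.dumps` adds them by default; both are equivalent JSON.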

In GridDB, the rowkey is optional. It acts as a unique identifier for each row in a collection, similar to a primary key in a relational table.

Enabling it (rowkey=true) for a collection provides:

- Fast lookups by key

- Updates and deletes by key

- Better performance for structured queries that filter on the key

The rowkey can also be disabled explicitly by setting rowkey=false when creating the container, which allows rows to be stored without a unique identifier.

Setting rowkey to false, however, comes with the following trade-offs:

- No point lookups by key

- No updates or deletes by key

- Potentially slower filtering, since there is no key column to look rows up by
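One practical consequence of these trade-offs is how repeated writes behave. The toy model below is not GridDB's client API and the class names are hypothetical; it assumes the behaviour we understand GridDB to have, namely that writing a row whose key already exists replaces that row, while a collection without a rowkey simply appends every row it receives. Under that assumption, re-running an upload is idempotent with a rowkey but duplicates every row without one.

```python
class KeyedCollection:
    """Toy model of a collection with a rowkey: writing an existing key replaces that row."""

    def __init__(self):
        self.rows = {}

    def put(self, row):
        self.rows[row[0]] = row  # the first column acts as the row key

    def get(self, key):
        return self.rows.get(key)  # point lookup by key

    def delete(self, key):
        self.rows.pop(key, None)  # delete by key


class UnkeyedCollection:
    """Toy model of a collection without a rowkey: every write appends a new row."""

    def __init__(self):
        self.rows = []

    def put(self, row):
        self.rows.append(row)


batch = [[1, "Headline A"], [2, "Headline B"]]
keyed, unkeyed = KeyedCollection(), UnkeyedCollection()
for _ in range(2):  # simulate running the same upload twice
    for row in batch:
        keyed.put(row)
        unkeyed.put(row)

print(len(keyed.rows), len(unkeyed.rows))  # prints 2 4
```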

In addition to single-column row keys, GridDB also supports composite row keys made up of multiple columns, similar to composite primary keys in relational databases. Refer to this GridDB resource to learn more.

Data Ingestion into GridDB using the Chunking Strategy

def chunker(df, size=100):
    """Yield successive chunks of size `size` from DataFrame `df`."""
    for start in range(0, len(df), size):
        yield df.iloc[start:start + size]


for chunk_df in chunker(news_trending, size=100):
    # Register each chunk of rows with a PUT request to the rows endpoint set up above
    response = requests.put(url, headers=header_obj, data=chunk_df.to_json(orient='values'))
    if response.status_code not in [200, 201]:
        print(f"Failed chunk upload: {response.status_code} - {response.text}")
    else:
        print(f"Chunk uploaded successfully: {len(chunk_df)} rows")

Chunk uploaded successfully: 100 rows
Chunk uploaded successfully: 100 rows
...
Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded 
successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows 
Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded 
successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows 
Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 100 rows Chunk uploaded successfully: 33 rows

An Overview of the Hybrid RAG Approach¶

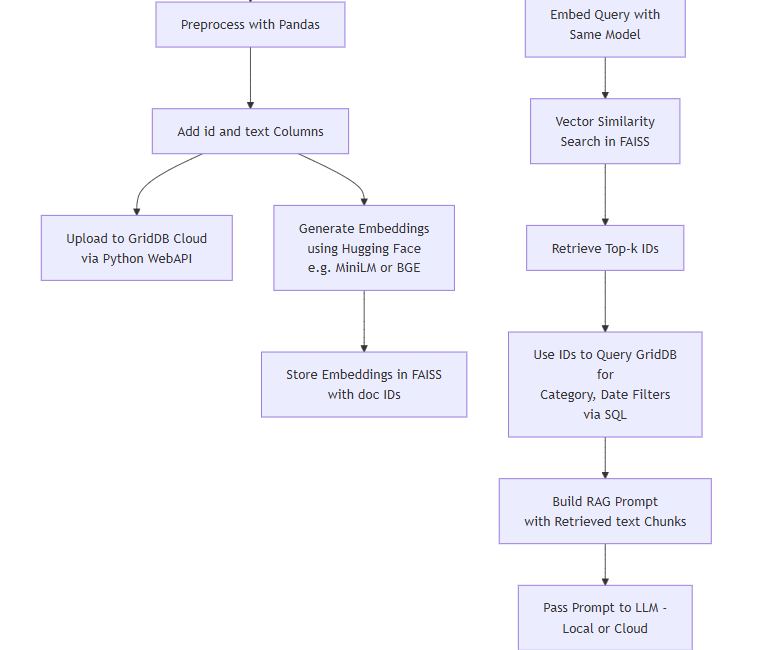

We use a Hybrid RAG Approach where the 1st layer involves structured retrieval using GridDB and the 2nd layer involves generating embeddings using an LLM (such as miniLM). The 3rd layer is the retrieval layer that is performed using FAISS. The resulting documents from the FAISS fed to the Generative model as the context. Below is a diagram showing the overall idea of the hybrid RAG Approach done using GridDB.

Image(filename='GridDB_Hugging_Face_RAG.png',width=800, height=500)
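To make the three layers concrete, here is a toy, self-contained sketch of the same flow. It is not the article's pipeline: a pandas filter stands in for the GridDB SQL query, a bag-of-words vectorizer stands in for all-MiniLM-L6-v2, and a NumPy dot product over L2-normalized vectors stands in for FAISS `IndexFlatIP`. The rows and helper names (`structured_filter`, `embed`, `semantic_search`) are hypothetical.

```python
import re

import numpy as np
import pandas as pd

# Hypothetical toy rows standing in for the news_category_data container
news = pd.DataFrame({
    "date": ["2021-03-01", "2019-06-12", "2022-01-20", "2021-07-04"],
    "category": ["POLITICS", "POLITICS", "TECH", "WELLNESS"],
    "text": [
        "President visits Japan for trade talks",
        "Senator visits Canada to discuss climate policy",
        "New smartphone chip unveiled at tech conference",
        "Yoga tips for better sleep",
    ],
})


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def structured_filter(df, categories, min_year):
    """Layer 1 stand-in for the GridDB SQL query: filter by category and year."""
    years = df["date"].str[:4].astype(int)
    mask = df["category"].isin(categories) & (years >= min_year)
    return df[mask].reset_index(drop=True)


def embed(texts, vocab):
    """Layer 2 stand-in for all-MiniLM-L6-v2: L2-normalized bag-of-words vectors."""
    vecs = np.zeros((len(texts), len(vocab)), dtype="float32")
    for row, text in enumerate(texts):
        for tok in tokenize(text):
            if tok in vocab:
                vecs[row, vocab[tok]] += 1.0
    norms = np.linalg.norm(vecs, axis=1, keepdims=True)
    return vecs / np.maximum(norms, 1e-12)


def semantic_search(query, candidates, k=1):
    """Layer 3 stand-in for FAISS IndexFlatIP: exact inner-product ranking."""
    texts = candidates["text"].tolist()
    all_tokens = sorted({t for s in texts + [query] for t in tokenize(s)})
    vocab = {tok: i for i, tok in enumerate(all_tokens)}
    scores = embed(texts, vocab) @ embed([query], vocab)[0]
    top = np.argsort(-scores)[: min(k, len(texts))]
    return candidates.iloc[top]


candidates = structured_filter(news, ["POLITICS", "TECH"], 2020)
hits = semantic_search("Which countries did the president visit?", candidates, k=1)
context = "\n\n".join(hits["text"])  # would be passed to the generator
print(context)
```

Because the structured filter runs first, the semantic search ranks only two candidate rows instead of four; at scale, this is what keeps the FAISS index small.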

Scenario 1: Gathering Insights related to Politics and Tech

In this scenario, we combine structured retrieval from GridDB with semantic vector retrieval via FAISS, so that the generated answer is grounded in retrieved news snippets. Grounding the prompt in retrieved text can help reduce LLM hallucinations.

Retrieval Layer-1: Implementing Structured Retrieval using GridDB (SQL-based)

sql_query1 = ("""

SELECT "date", CAST(SUBSTR("date", 1,4) AS INTEGER) AS news_year, category, text

FROM news_category_data

WHERE category IN ('TECH', 'POLITICS')

AND CAST(SUBSTR("date", 1,4) AS INTEGER) >=2020

""")

# Set up the URL used to invoke the GridDB WebAPI SQL endpoint

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query1

}

])

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

# Invoke the GridDB WebAPI and fail fast on HTTP errors

data_req1 = requests.post(url, data=request_body, headers=header_obj)

data_req1.raise_for_status()

# Build a Pandas DataFrame from the column names and rows in the response

myJson = data_req1.json()

politics_tech_news = pd.DataFrame(myJson[0]["results"], columns=[col["name"] for col in myJson[0]["columns"]])
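All four scenarios repeat the same request and response handling. A minimal sketch of helpers that consolidate it is shown below. It assumes the response shape the code above relies on (a list whose first element holds `columns` and `results`). The function names are hypothetical, and `run_sql` simply wraps the same `requests.post` call.

```python
import json

import pandas as pd


def build_sql_request(stmt):
    """Serialize one SQL statement in the request-body shape used in this article."""
    return json.dumps([{"type": "sql-select", "stmt": stmt}])


def sql_response_to_dataframe(payload):
    """Convert the first result set of a WebAPI SQL response into a DataFrame.

    Assumes the response shape the code above relies on: a list whose first
    element holds "columns" (dicts with a "name" key) and "results" (rows).
    """
    result = payload[0]
    columns = [col["name"] for col in result["columns"]]
    return pd.DataFrame(result["results"], columns=columns)


def run_sql(stmt, base_url, headers):
    """Hypothetical wrapper: POST to <base_url>sql and return a DataFrame."""
    import requests  # imported here so the pure helpers above work without it

    resp = requests.post(base_url + "sql", data=build_sql_request(stmt), headers=headers)
    resp.raise_for_status()
    return sql_response_to_dataframe(resp.json())


# Offline check with a hypothetical payload in the same shape
sample = [{
    "columns": [{"name": "date"}, {"name": "category"}, {"name": "text"}],
    "results": [["2021-03-01", "POLITICS", "President visits Japan"]],
}]
df = sql_response_to_dataframe(sample)
print(df)
```

With these helpers, each scenario's query reduces to one call such as `politics_tech_news = run_sql(sql_query1, base_url, header_obj)`, reusing the `base_url` and `header_obj` defined earlier.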

Retrieval Layer-2: Implementing Vector-Based Retrieval and Indexing (MiniLM and FAISS)

# Initialize the sentence-embedding model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Step 1: Embed all retrieved news snippets

texts = politics_tech_news['text'].tolist()

embeddings = model.encode(texts, show_progress_bar=False)

embeddings = np.array(embeddings).astype('float32')

# Normalize for cosine similarity

faiss.normalize_L2(embeddings)

# Step 2: Build FAISS index

dimension = embeddings.shape[1]

index = faiss.IndexFlatIP(dimension) # Inner Product = cosine similarity on normalized vectors

index.add(embeddings)

# Function to run a query and retrieve the top-k documents

def retrieve_docs(query, k=5):

    # Clamp k: FAISS returns -1 ids when k exceeds the number of indexed vectors

    k = min(k, index.ntotal)

    q_emb = model.encode([query])

    q_emb = np.array(q_emb).astype('float32')

    faiss.normalize_L2(q_emb)

    D, I = index.search(q_emb, k)

    results = []

    for idx in I[0]:

        results.append(politics_tech_news.iloc[idx])

    return results

# Example usage

query = "Which countries did political leaders visit?"

retrieved = retrieve_docs(query, k=400)

# Optional: preview the top matches
# for doc in retrieved[:5]:
#     print(f"{doc['date']} [{doc['category']}] {doc['text']}\n")

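`IndexFlatIP` performs exact, brute-force inner-product search. After `faiss.normalize_L2`, every vector has unit length, so the inner product of two vectors equals their cosine similarity. The NumPy sketch below reproduces that ranking without FAISS; the helper names are hypothetical. It also clamps `k`, because when `k` exceeds the number of indexed vectors, FAISS fills the missing result slots with the id `-1`, and `DataFrame.iloc[-1]` would then silently return the last row.

```python
import numpy as np


def l2_normalize(x):
    """Row-wise L2 normalization (returns a copy; faiss.normalize_L2 works in place)."""
    x = np.asarray(x, dtype="float32")
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, 1e-12)


def flat_ip_search(doc_vecs, query_vecs, k):
    """Exact top-k inner-product search, i.e. the ranking IndexFlatIP computes.

    k is clamped to the number of documents, so no placeholder ids are needed.
    """
    scores = query_vecs @ doc_vecs.T          # shape (n_queries, n_docs)
    k = min(k, doc_vecs.shape[0])
    ids = np.argsort(-scores, axis=1)[:, :k]  # best match first
    return np.take_along_axis(scores, ids, axis=1), ids


docs = l2_normalize([[3.0, 4.0], [1.0, 0.0], [-1.0, 0.0]])
query = l2_normalize([[1.0, 1.0]])
D, I = flat_ip_search(docs, query, k=10)      # asks for more than 3 docs

# Cosine similarity of the raw (unnormalized) vectors, for comparison
a, b = np.array([3.0, 4.0]), np.array([1.0, 1.0])
cosine = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
print(I, D[0, 0], cosine)
```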

Setting the Contextual Layer

# Join the top 150 retrieved snippets into one context string, separated by blank lines

context = "\n\n".join(doc['text'] for doc in retrieved[:150])
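Joining a fixed number of snippets does not guarantee that the prompt fits the model. The warnings printed by the generation cells show that the prompt exceeds the 512-token maximum sequence length the FLAN-T5 tokenizer reports (for example, 6478 > 512), and the tokenizer warns that running such sequences through the model may cause indexing errors. Below is a sketch of a token-budgeted alternative. `pack_context` is a hypothetical helper, and the budget should leave room for the prompt's instructions and question.

```python
def pack_context(texts, count_tokens, max_tokens, sep="\n\n"):
    """Join ranked texts until adding the next one would exceed max_tokens.

    count_tokens is any callable that maps a string to a token count.
    """
    picked = []
    for text in texts:
        candidate = sep.join(picked + [text])
        if count_tokens(candidate) > max_tokens:
            break  # stop at the first overflow to keep the ranking order
        picked.append(text)
    return sep.join(picked)


# Toy check with whitespace "tokens"; with a Hugging Face tokenizer you could pass
# count_tokens=lambda s: len(tokenizer(s)["input_ids"]) instead.
ranked = ["a b c", "d e", "f g h i"]
packed = pack_context(ranked, lambda s: len(s.split()), max_tokens=5)
print(repr(packed))
```

Stopping at the first snippet that does not fit, rather than skipping it, keeps the highest-ranked FAISS results at the front of the context.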

Generative Layer: Building the RAG Prompt with the Retrieved Texts

os.environ["HF_HUB_DISABLE_SYMLINKS_WARNING"] = "1"

generator = pipeline("text2text-generation", model="google/flan-t5-base")

query = "Summarize the main political visits made by the president of the United States."

prompt = f"""

You are a helpful assistant. Use the following context to answer the question.

Context:

{context}

Question:

{query}

Answer:

"""

response = generator(prompt, max_new_tokens=200)

Standardizing the Results

raw_answer = response[0]['generated_text']

# Split the answer on commas and strip surrounding whitespace

country_list = [c.strip() for c in raw_answer.split(',')]

# Remove duplicates while preserving order

unique_countries = list(OrderedDict.fromkeys(country_list))

# Join back if you want to display it as a string

final_answer = ', '.join(unique_countries)

print("Cleaned Final RAG Answer:\n", final_answer)

Cleaned Final RAG Answer: President-elect visits Ukraine, Russia, Ukraine
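`OrderedDict.fromkeys` removes only exact duplicates, so items that differ only in case both survive, and an empty item remains if the model emits a stray comma. A slightly more forgiving variant is sketched below; the helper name is hypothetical. A plain `dict` also preserves insertion order in Python 3.7 and later.

```python
def dedupe_items(raw_answer, sep=","):
    """Split a generated answer into items and drop empty or repeated ones.

    Comparison is case-insensitive; the first spelling seen is kept.
    """
    seen = {}
    for item in raw_answer.split(sep):
        item = item.strip()
        key = item.casefold()
        if item and key not in seen:
            seen[key] = item
    return list(seen.values())


print(", ".join(dedupe_items("Ukraine, Russia, ukraine, , Russia")))
```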

Scenario 2: Gathering Insights related to Entertainment and Travel

Retrieval Layer-1: Structured Retrieval using GridDB

Here, we use the LIKE operator for wildcard pattern matching and the IN operator to select the data of interest. GridDB SQL also supports type conversion with CAST, case conversion with LOWER, and substring extraction with SUBSTR. Refer to this GridDB resource to learn more.

sql_query2 = ("""

SELECT "date", category, text

FROM news_category_data

WHERE category IN ('ENTERTAINMENT', 'TRAVEL')

AND CAST(SUBSTR("date", 1, 4) AS INTEGER) >= 2020

AND (

LOWER(text) LIKE '%visit%' OR

LOWER(text) LIKE '%travel%' OR

LOWER(text) LIKE '%flew%' OR

LOWER(text) LIKE '%trip%' OR

LOWER(text) LIKE '%headed to%' OR

LOWER(text) LIKE '% state %' OR

LOWER(text) LIKE '% states %' OR

LOWER(text) LIKE '%summit%' OR

LOWER(text) LIKE '%conference%' OR

LOWER(text) LIKE '%meeting%'

);

""")

# Set up the URL used to invoke the GridDB WebAPI SQL endpoint

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query2

}

])

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

# Invoke the GridDB WebAPI and fail fast on HTTP errors

data_req2 = requests.post(url, data=request_body, headers=header_obj)

data_req2.raise_for_status()

# Build a Pandas DataFrame from the column names and rows in the response

myJson = data_req2.json()

travel_entertainment_news = pd.DataFrame(myJson[0]["results"], columns=[col["name"] for col in myJson[0]["columns"]])
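In LIKE patterns, `%` matches any sequence of characters (including none). The placement of `%` therefore decides whether a keyword may appear anywhere in the text or only at its end. `=` instead requires the whole value to match. The sketch below illustrates these standard SQL semantics with Python's built-in `sqlite3` module so that it runs without a GridDB instance. The table and rows are hypothetical, and the text is lowercased with `LOWER()` as in the article's queries.

```python
import sqlite3

# In-memory SQLite table, used only to illustrate pattern semantics (not GridDB)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE news (text TEXT)")
conn.executemany("INSERT INTO news VALUES (?)", [
    ("Celebrity visited Paris on a summer trip",),
    ("Street style from fashion week",),
    ("Her signature style",),
    ("State",),
])


def matches(where_clause):
    """Return the texts that satisfy a literal WHERE clause, sorted by text."""
    rows = conn.execute(f"SELECT text FROM news WHERE {where_clause} ORDER BY text")
    return [r[0] for r in rows]


substring = matches("LOWER(text) LIKE '%visit%'")    # keyword anywhere
suffix_only = matches("LOWER(text) LIKE '%style'")   # keyword only at the end
anywhere = matches("LOWER(text) LIKE '%style%'")
exact = matches("LOWER(text) = 'state'")             # whole value must match
print(substring, suffix_only, anywhere, exact)
```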

Retrieval Layer-2: Getting Semantically Relevant Snippets

# Initialize the sentence-embedding model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Drop rows without text and reset the index, so that FAISS id i maps to row i

travel_entertainment_news = travel_entertainment_news.dropna(subset=['text']).reset_index(drop=True)

texts = travel_entertainment_news['text'].astype(str).tolist()

embeddings = model.encode(texts, show_progress_bar=False)

embeddings = np.array(embeddings).astype('float32')

# Normalize for cosine similarity

faiss.normalize_L2(embeddings)

# Build the FAISS index

dimension = embeddings.shape[1]

index = faiss.IndexFlatIP(dimension) # Inner Product = cosine similarity on normalized vectors

index.add(embeddings)

# Function to run a query and retrieve the top-k documents

def retrieve_docs(query, k=5):

    # Clamp k: FAISS returns -1 ids when k exceeds the number of indexed vectors

    k = min(k, index.ntotal)

    q_emb = model.encode([query])

    q_emb = np.array(q_emb).astype('float32')

    faiss.normalize_L2(q_emb)

    D, I = index.search(q_emb, k)

    results = []

    for idx in I[0]:

        results.append(travel_entertainment_news.iloc[idx])

    return results

# Example usage

query = "List the actors mentioned in the articles"

retrieved = retrieve_docs(query, k=100)

# Optional: preview the top matches
# for doc in retrieved[:5]:
#     print(f"{doc['date']} [{doc['category']}] {doc['text']}\n")
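FAISS returns positions in the array of embedded texts, and `retrieve_docs` looks those positions up with `.iloc`. If rows are dropped from the text list but not from the DataFrame, the two no longer line up. The pandas sketch below uses hypothetical data to show the mismatch. It also shows the fix: clean the DataFrame itself and reset its index before building the text list.

```python
import pandas as pd

# Hypothetical query result with a missing text value in the middle
frame = pd.DataFrame({"text": ["first headline", None, "third headline"]})

# Misaligned: dropping NaN only from the list shifts every later position
texts_misaligned = frame["text"].dropna().astype(str).tolist()

# Aligned: clean the DataFrame, then reset the index so list position i is row i
clean = frame.dropna(subset=["text"]).reset_index(drop=True)
texts = clean["text"].astype(str).tolist()

faiss_id = 1  # suppose FAISS ranks the 2nd embedded text highest
wrong_row = frame.iloc[faiss_id]["text"]   # looks up the missing value
right_row = clean.iloc[faiss_id]["text"]   # looks up the text that was embedded
print(texts_misaligned[faiss_id], wrong_row, right_row)
```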

Setting the Contextual Layer

# Join the top 100 retrieved snippets into one context string, separated by blank lines

context = "\n\n".join(doc['text'] for doc in retrieved[:100])

Generative Layer

os.environ["HF_HUB_DISABLE_SYMLINKS_WARNING"] = "1"

model_name = "google/flan-t5-large"

# Load the slow (SentencePiece) tokenizer instead of converting it to a fast tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=False)

# Use a separate name so the SentenceTransformer `model` above is not overwritten

gen_model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

generator = pipeline(

"text2text-generation",

model=gen_model,

tokenizer=tokenizer

)

retrieval_query = "List only the celebrity names in the articles"

prompt = f"""

You are a helpful assistant. Use the following context to answer the question.

Context:

{context}

Question:

{retrieval_query}

Answer:

"""

# Generate response from the model

response = generator(prompt, max_new_tokens=200)

# Print the generated text answer

print("Final RAG Answer:\n", response[0]['generated_text'])

Device set to use cpu

Token indices sequence length is longer than the specified maximum sequence length for this model (6478 > 512). Running this sequence through the model will result in indexing errors

Final RAG Answer: Rob Gronkowski, Dalvin Cook and Travis Kelce

Scenario 3: Gathering Insights related to Wellness, Style and Beauty

GridDB SQL supports conditional expressions (CASE WHEN ... THEN ... ELSE ... END) and logical grouping of conditions with parentheses, much like other relational databases. Refer to this GridDB resource to learn more.
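A searched CASE expression evaluates its WHEN branches from top to bottom and returns the result of the first one that matches. In `sql_query3`, a snippet mentioning both wellness and beauty is therefore labelled 'Wellness'. Rows that pass the WHERE clause only through a keyword with no matching branch (such as '%product%') fall through to 'Other'. The sketch below shows this first-match behaviour with Python's built-in `sqlite3` module, which is used only so that it runs without GridDB; the rows are hypothetical.

```python
import sqlite3

# In-memory SQLite table, used only to illustrate CASE semantics (not GridDB)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE news (text TEXT)")
conn.executemany("INSERT INTO news VALUES (?)", [
    ("Wellness retreats add beauty treatments",),
    ("New makeup brand launches",),
    ("Stock market update",),
])

rows = conn.execute("""
    SELECT text,
           CASE
               WHEN LOWER(text) LIKE '%wellness%' THEN 'Wellness'
               WHEN LOWER(text) LIKE '%beauty%' THEN 'Beauty'
               WHEN LOWER(text) LIKE '%makeup%' THEN 'Makeup'
               ELSE 'Other'
           END AS label
    FROM news
    ORDER BY text
""").fetchall()

labels = dict(rows)  # text -> first matching label
print(labels)
```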

Retrieval Layer-1: Structured Retrieval using GridDB

sql_query3 = ("""SELECT

"date",

text,

CASE

WHEN LOWER(text) LIKE '%wellness%' THEN 'Wellness'

WHEN LOWER(text) LIKE '%coach%' THEN 'Coach'

WHEN LOWER(text) LIKE '%beauty%' THEN 'Beauty'

WHEN LOWER(text) LIKE '%style%' THEN 'Style'

WHEN LOWER(text) LIKE '%skincare%' THEN 'Skincare'

WHEN LOWER(text) LIKE '%makeup%' THEN 'Makeup'

WHEN LOWER(text) LIKE '%brand%' THEN 'Brand'

ELSE 'Other'

END AS category

FROM news_category_data

WHERE CAST(SUBSTR("date", 1, 4) AS INTEGER) >= 2020

AND (

LOWER(text) LIKE '%wellness%'

OR LOWER(text) LIKE '%product%'

OR LOWER(text) LIKE '%beauty%'

OR LOWER(text) LIKE '%style%'

OR LOWER(text) LIKE '%skincare%'

OR LOWER(text) LIKE '%makeup%'

OR LOWER(text) LIKE '%brand%'

)

""")

# Set up the URL used to invoke the GridDB WebAPI SQL endpoint

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query3

}

])

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

# Invoke the GridDB WebAPI and fail fast on HTTP errors

data_req3 = requests.post(url, data=request_body, headers=header_obj)

data_req3.raise_for_status()

# Build a Pandas DataFrame from the column names and rows in the response

myJson = data_req3.json()

style_beauty_news = pd.DataFrame(myJson[0]["results"], columns=[col["name"] for col in myJson[0]["columns"]])

Retrieval Layer-2: Semantic Retrieval and Indexing

# Initialize the sentence-embedding model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Step 1: Keep only unique text snippets

categories = style_beauty_news['text'].dropna().astype(str).unique().tolist()

# Step 2: Embed the snippets

embeddings = model.encode(categories, show_progress_bar=False)

embeddings = np.array(embeddings).astype('float32')

# Normalize embeddings

faiss.normalize_L2(embeddings)

# Step 3: Build FAISS index

dimension = embeddings.shape[1]

index = faiss.IndexFlatIP(dimension)

index.add(embeddings)

# Step 4: Retrieval function

def retrieve_categories(query, k=5):

    # Clamp k: FAISS returns -1 ids when k exceeds the number of indexed vectors

    k = min(k, index.ntotal)

    q_emb = model.encode([query])

    q_emb = np.array(q_emb).astype('float32')

    faiss.normalize_L2(q_emb)

    D, I = index.search(q_emb, k)

    return [categories[idx] for idx in I[0]]

# Example usage

query = "What are the top news headlines talking about skin and hair care?"

retrieved_categories = retrieve_categories(query, k=100)

#print(retrieved_categories)

#retrieved_categories

# De-duplicate while preserving rank order (dict keys keep insertion order)

retrieved_unique = list(dict.fromkeys(retrieved_categories))

Setting the Context

context = "\n\n".join(retrieved_unique)

Generative Layer

os.environ["HF_HUB_DISABLE_SYMLINKS_WARNING"] = "1"

generator = pipeline("text2text-generation", model="google/flan-t5-base")

query = "What professions have been mentioned in news headlines about skin and hair care."

prompt = f"""

You are a helpful assistant. Use the following context to answer the question.

Context:

{context}

Question:

{query}

Answer:

"""

response = generator(prompt, max_new_tokens=200)

print(response[0]['generated_text'])

Device set to use cpu

Token indices sequence length is longer than the specified maximum sequence length for this model (2923 > 512). Running this sequence through the model will result in indexing errors

Hairstylists, makeup artists, nail technicians and lash professionals

Scenario 4: Gathering Articles related to Violence

Retrieval Layer-1: Structured Retrieval using GridDB

sql_query4 = ("""SELECT

SUBSTR("date", 6, 2) AS month,

SUBSTR("date", 1, 4) AS year,

text

FROM news_category_data

WHERE (

LOWER("text") LIKE '%violence%'

OR LOWER("text") LIKE '%shoot%'

OR LOWER("text") LIKE '%attack%'

OR LOWER("text") LIKE '%hit%'

OR LOWER("text") LIKE '%crime%'

)

AND CAST(SUBSTR("date", 1, 4) AS INTEGER) >= 2020

ORDER BY month

""")

# Set up the URL used to invoke the GridDB WebAPI SQL endpoint

url = base_url + 'sql'

# Construct the request body

request_body = json.dumps([

{

"type": "sql-select",

"stmt": sql_query4

}

])

# Disable HTTP debugging

http.client.HTTPConnection.debuglevel = 0

# Invoke the GridDB WebAPI and fail fast on HTTP errors

data_req4 = requests.post(url, data=request_body, headers=header_obj)

data_req4.raise_for_status()

# Build a Pandas DataFrame from the column names and rows in the response

myJson = data_req4.json()

violence_news = pd.DataFrame(myJson[0]["results"], columns=[col["name"] for col in myJson[0]["columns"]])
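In SQL, AND has higher precedence than OR. A year filter appended after a chain of OR conditions therefore constrains only the last condition unless the OR chain is wrapped in parentheses. The sketch below demonstrates this on hypothetical rows with Python's built-in `sqlite3` module, which stands in for GridDB so that the example runs anywhere.

```python
import sqlite3

# In-memory SQLite table, used only to illustrate operator precedence (not GridDB)
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE news ("date" TEXT, text TEXT)')
conn.executemany("INSERT INTO news VALUES (?, ?)", [
    ("2016-06-14", "Concerns about gun violence spike after attack"),
    ("2021-05-02", "City reports drop in violence"),
    ("2021-08-09", "Crime rates in 2021"),
])

year_filter = 'CAST(SUBSTR("date", 1, 4) AS INTEGER) >= 2020'

# Without parentheses this parses as: violence OR (crime AND year_filter)
unparenthesized = conn.execute(f"""
    SELECT "date" FROM news
    WHERE LOWER(text) LIKE '%violence%' OR LOWER(text) LIKE '%crime%'
    AND {year_filter}
    ORDER BY "date"
""").fetchall()

# With parentheses, the year filter applies to every keyword condition
parenthesized = conn.execute(f"""
    SELECT "date" FROM news
    WHERE (LOWER(text) LIKE '%violence%' OR LOWER(text) LIKE '%crime%')
    AND {year_filter}
    ORDER BY "date"
""").fetchall()

print(unparenthesized, parenthesized)
```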

Retrieval Layer-2: Semantic Retrieval and Indexing

# Initialize the sentence-embedding model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Step 1: Keep only unique text snippets

categories = violence_news['text'].dropna().astype(str).unique().tolist()

# Step 2: Embed the snippets

embeddings = model.encode(categories, show_progress_bar=False)

embeddings = np.array(embeddings).astype('float32')

# Normalize embeddings

faiss.normalize_L2(embeddings)

# Step 3: Build FAISS index

dimension = embeddings.shape[1]

index = faiss.IndexFlatIP(dimension)

index.add(embeddings)

# Step 4: Retrieval function

def retrieve_categories(query, k=5):

    # Clamp k: FAISS returns -1 ids when k exceeds the number of indexed vectors

    k = min(k, index.ntotal)

    q_emb = model.encode([query])

    q_emb = np.array(q_emb).astype('float32')

    faiss.normalize_L2(q_emb)

    D, I = index.search(q_emb, k)

    return [categories[idx] for idx in I[0]]

# Example usage

query = "What are the top news headlines talking about violence?"

retrieved_categories = retrieve_categories(query, k=100)

#print(retrieved_categories)

# De-duplicate while preserving rank order (dict keys keep insertion order)

retrieved_unique = list(dict.fromkeys(retrieved_categories))

Setting the Context

context = "\n\n".join(retrieved_unique)

Generative Layer

os.environ["HF_HUB_DISABLE_SYMLINKS_WARNING"] = "1"

generator = pipeline("text2text-generation", model="google/flan-t5-base")

query = "What are the snippets on gun violence and family violence?"

prompt = f"""

You are a helpful assistant. Use the following context to answer the question.

Context:

{context}

Question:

{query}

Answer:

"""

response = generator(prompt, max_new_tokens=200)

print(response[0]['generated_text'])

Device set to use cpu

Token indices sequence length is longer than the specified maximum sequence length for this model (5460 > 512). Running this sequence through the model will result in indexing errors

HUFFPOLLSTER: Concerns About Gun Violence And Terrorism Spike After Orlando Attack — Just like they do after every major shooting or terrorism incident.

Concluding Remarks

In this article, we combined GridDB with a sentence-embedding model (all-MiniLM-L6-v2), FAISS, and a generative model (FLAN-T5) to build a hybrid RAG pipeline. GridDB's SQL support lets us pre-select a relevant subset of the news data by category, date, and keyword before any embeddings are computed. This keeps the FAISS index small, focuses the semantic search on likely candidates, and improves the quality of the context passed to the generative model, which in turn helps it produce better-grounded answers. GridDB can therefore serve as a valuable companion for unstructured text analysis and lightweight LLM workflows.

GridDB is available as a free, open-source community edition and as an enterprise edition. Check out the official page to learn more.

If you have any questions about the blog, please create a Stack Overflow post here: https://stackoverflow.com/questions/ask?tags=griddb

Make sure that you use the “griddb” tag so our engineers can quickly reply to your questions.