Introduction

Today, we will cover how to scrape data from a website using Scrapy, a Python library. We will then save the data in a JSON file. Finally, we will see how to store this data in GridDB for long-term and efficient use.

Pre-requisites

This post requires the prior installation of the following:

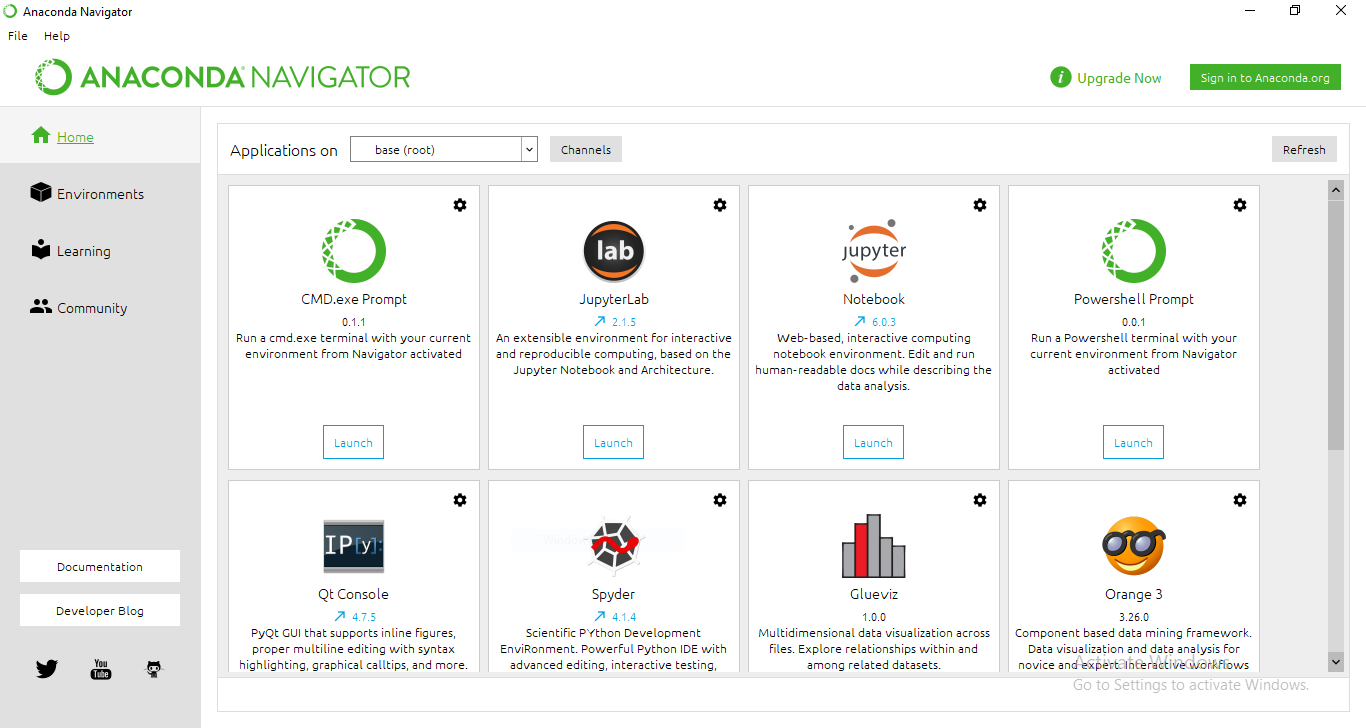

We also recommend installing Anaconda Navigator, if not already installed. Anaconda provides a large range of tools for data scientists to experiment with. Also, a virtual environment can help you meet the specific version requirements while running an application without interfering with the actual system paths.

Creating a new project using Scrapy

For this tutorial, we will be using Anaconda’s Command Line Interface and Jupyter Notebooks. Both of these tools can be found in the Anaconda Dashboard.

Creating a new project with Scrapy is simple. Run the following command in the directory where you want the new project folder to be created:

scrapy startproject griddb_tutorial

A new folder named griddb_tutorial has now been created in the current directory. Let us look at the contents of this folder:

tree directory_path /F
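The /F switch used above belongs to the Windows tree command. On other systems, a minimal Python sketch along the following lines prints a comparable listing. The helper name format_tree is our own and is not part of Scrapy.

```python
import os


def format_tree(root):
    """Return an indented listing of every folder and file under root."""
    root = os.path.abspath(root)
    lines = []
    for current, dirs, files in os.walk(root):
        dirs.sort()  # visit sub-folders in a stable, alphabetical order
        if current == root:
            depth = 0
        else:
            depth = os.path.relpath(current, root).count(os.sep) + 1
        indent = "    " * depth
        lines.append(f"{indent}{os.path.basename(current)}/")
        for name in sorted(files):
            lines.append(f"{indent}    {name}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumes the command is run from the directory that holds the project
    print(format_tree("griddb_tutorial"))
```

Files are listed directly under the folder that contains them, followed by that folder's sub-folders.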

Extracting data from a URL

Scrapy uses a class called Spider to crawl websites and extract information. We write our custom parsing code and define the initial requests inside a subclass of Spider. For this tutorial, we will be scraping funny quotes from the website quotes.toscrape.com and storing the information in JSON format.

The following lines of code collect the information about the text, author, and tags associated with a quote.

import scrapy


class QuotesSpider(scrapy.Spider):
    # Unique name used to run this spider from the command line
    name = "quotes_funny"
    start_urls = [
        'http://quotes.toscrape.com/tag/humor',
    ]

    def parse(self, response):
        # Each quote on the page sits in its own div.quote element
        for quote in response.css('div.quote'):
            yield {
                'text': quote.css('span.text::text').get(),
                'author': quote.css('small.author::text').get(),
                'tags': quote.css('div.tags a.tag::text').getall(),
            }

We will now save this Python file in the griddb_tutorial/griddb_tutorial/spiders directory, which is the spiders folder that startproject generated. We execute a spider by providing its name to Scrapy through the command line. Therefore, it is important to use a unique name for each spider.

Back in the project's top-level directory, let's run the spider and see what we get:

scrapy crawl quotes_funny

It takes some time to extract the data. Once the execution is complete, the output looks like this:

DEBUG: Scraped from <200 http://quotes.toscrape.com/tag/humor/>
{'text': '"The reason I talk to myself is because I'm the only one whose answers I accept."', 'author': 'George Carlin', 'tags': ['humor', 'insanity', 'lies', 'lying', 'self-indulgence', 'truth']}
2021-05-29 21:29:44 [scrapy.core.engine] INFO: Closing spider (finished)

Storing data into JSON

To store the crawled data, we pass one additional option to the same command:

scrapy crawl quotes_funny -O quotes_funny.json

This creates a new file named quotes_funny.json in the project directory. Note that the -O option overwrites any existing file with the same name. If you want to append new content to an existing file, use -o instead.
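One caveat with -o: appending a second JSON array to a file that already holds one leaves a file that is no longer valid JSON. The sketch below reproduces this with Python's standard json module and shows the JSON Lines layout (one object per line), which can be appended to safely. The sample records and variable names are illustrative and do not come from a real crawl.

```python
import json

first_run = [{"author": "Jane Austen"}]
second_run = [{"author": "George Carlin"}]

# Writing one JSON array after another, which is what appending to a .json
# feed amounts to, produces text that the json module refuses to parse.
appended = json.dumps(first_run) + "\n" + json.dumps(second_run)
try:
    json.loads(appended)
    appended_is_valid = True
except json.JSONDecodeError:
    appended_is_valid = False

# JSON Lines keeps one object per line, so every line stays valid on its own.
json_lines = "\n".join(json.dumps(item) for item in first_run + second_run)
records = [json.loads(line) for line in json_lines.splitlines()]

print(appended_is_valid)
print(records)
```

If you plan to append across several crawls, Scrapy can write JSON Lines directly when the output file uses the .jl extension, for example -o quotes_funny.jl.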

The content of the JSON file will look like this:

[
{"text": "\u201cThe person, be it gentleman or lady, who has not pleasure in a good novel, must be intolerably stupid.\u201d", "author": "Jane Austen", "tags": ["aliteracy", "books", "classic", "humor"]},
{"text": "\u201cAll you need is love. But a little chocolate now and then doesn't hurt.\u201d", "author": "Charles M. Schulz", "tags": ["chocolate", "food", "humor"]},
{"text": "\u201cThe reason I talk to myself is because I\u2019m the only one whose answers I accept.\u201d", "author": "George Carlin", "tags": ["humor", "insanity", "lies", "lying", "self-indulgence", "truth"]}
]

Storing data into GridDB

If you’re collecting data continuously over time, it makes sense to store it in a database. GridDB can store time-series data and is optimized for IoT and Big Data workloads. It is highly scalable and offers both SQL and NoSQL interfaces. Follow their tutorial to get started.

We have the data collected by Scrapy in a JSON file. Moreover, many websites allow you to export data in JSON format. Therefore, we will write a Python script to load the data into our environment.

Reading data from a JSON file

import json

# Load the scraped quotes; the with block closes the file afterwards
with open('quotes_funny.json', encoding='utf-8') as f:
    data = json.load(f)

print(data[0])

We will get an output similar to:

{'text': '"The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking."',
 'author': 'Albert Einstein',
 'tags': ['change', 'deep-thoughts', 'thinking', 'world']}

To extract all the key-value pairs:

for d in data:
    for key, value in d.items():
        print(key, value)

Now that we have extracted each key-value pair, let us initialize a GridDB instance.
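Before the data can go into a database, each dictionary has to become a flat row, because tags is a list while the other two fields are plain strings. A minimal sketch, assuming the three keys shown above and assuming the tags are stored as one comma-separated string (the helper name quote_to_row is hypothetical):

```python
def quote_to_row(quote):
    """Flatten one scraped quote into [text, author, tags], all strings."""
    return [
        quote["text"],
        quote["author"],
        ",".join(quote["tags"]),  # assumption: tags kept as a single string
    ]


sample = {
    "text": "\u201cAll you need is love. But a little chocolate now and then doesn't hurt.\u201d",
    "author": "Charles M. Schulz",
    "tags": ["chocolate", "food", "humor"],
}
row = quote_to_row(sample)
print(row)
```

Joining the tags keeps the row to three string values. Splitting on the comma recovers the list later, as long as no tag contains a comma itself.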

GridDB container initialization

import griddb_python as griddb

factory = griddb.StoreFactory.get_instance()

# Container Initialization
# your_host, your_port, etc. and the attribute list are placeholders
try:
    gridstore = factory.get_store(host=your_host, port=your_port,
            cluster_name=your_cluster_name, username=your_username,
            password=your_password)

    conInfo = griddb.ContainerInfo("Dataset_Name",
                    [["attribute1", griddb.Type.STRING],["attribute2",griddb.Type.FLOAT],
                    ....],
                    griddb.ContainerType.COLLECTION, True)

    cont = gridstore.put_container(conInfo)
    # The indexed column ("id" here) must be one of the attributes declared above
    cont.create_index("id", griddb.IndexType.DEFAULT)
except griddb.GSException as e:
    # Print every entry of the error stack
    for i in range(e.get_error_stack_size()):
        print("[", i, "]")
        print(e.get_error_code(i))
        print(e.get_location(i))
        print(e.get_message(i))

Fill in your own details in the above code. Note that in our case, every attribute is essentially a STRING. More information on the data types supported by GridDB can be found in the GridDB documentation.
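Rather than hard-coding the connection details, one option is to read them from environment variables. The sketch below only builds the keyword arguments that factory.get_store(...) receives in the listing above. The GRIDDB_* variable names and the load_store_settings helper are our own invention, and no default values are assumed.

```python
import os

# Maps each get_store() keyword argument to a hypothetical environment variable
REQUIRED = {
    "host": "GRIDDB_HOST",
    "port": "GRIDDB_PORT",
    "cluster_name": "GRIDDB_CLUSTER",
    "username": "GRIDDB_USER",
    "password": "GRIDDB_PASSWORD",
}


def load_store_settings(environ=os.environ):
    """Collect connection settings, failing early if any variable is missing."""
    missing = [var for var in REQUIRED.values() if var not in environ]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    settings = {arg: environ[var] for arg, var in REQUIRED.items()}
    settings["port"] = int(settings["port"])  # environment values are strings
    return settings


# Demonstration with made-up values instead of the real environment
example_env = {
    "GRIDDB_HOST": "example-host",
    "GRIDDB_PORT": "12345",
    "GRIDDB_CLUSTER": "example-cluster",
    "GRIDDB_USER": "example-user",
    "GRIDDB_PASSWORD": "example-password",
}
print(load_store_settings(example_env))
```

With the variables set, the connection call could then be written as factory.get_store(**load_store_settings()), which keeps credentials out of the script itself.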

Insert data into the GridDB container

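Our JSON file is a list of dictionaries. Each dictionary contains three attributes: text, author, and tags. We loop over the list and insert one row per quote. Since tags is itself a list, we join it into a single string first.

for d in data:
    # One row per quote; assumes the container columns are text, author, tags (all STRING)
    ret = cont.put([d['text'], d['author'], ','.join(d['tags'])])

The final insertion script looks like this: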

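import json

import griddb_python as griddb

# Load the quotes scraped earlier
with open('quotes_funny.json', encoding='utf-8') as f:
    data = json.load(f)

factory = griddb.StoreFactory.get_instance()

# Container Initialization
# your_host, your_port, etc. and the attribute list are placeholders
try:
    gridstore = factory.get_store(host=your_host, port=your_port,
            cluster_name=your_cluster_name, username=your_username,
            password=your_password)

    conInfo = griddb.ContainerInfo("Dataset_Name",
                    [["attribute1", griddb.Type.STRING],["attribute2",griddb.Type.FLOAT],
                    ....],
                    griddb.ContainerType.COLLECTION, True)

    cont = gridstore.put_container(conInfo)
    # The indexed column ("id" here) must be one of the attributes declared above
    cont.create_index("id", griddb.IndexType.DEFAULT)

    # Adding data to container
    for d in data:
        # One row per quote; assumes the container columns are text, author, tags (all STRING)
        ret = cont.put([d['text'], d['author'], ','.join(d['tags'])])
except griddb.GSException as e:
    # Print every entry of the error stack
    for i in range(e.get_error_stack_size()):
        print("[", i, "]")
        print(e.get_error_code(i))
        print(e.get_location(i))
        print(e.get_message(i))

Check out the default cluster values on the official GitHub page of GridDB’s Python client.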
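The listing indexes a column named id, but the scraped quotes carry no identifier of their own. One way to obtain one, sketched here under our own assumptions, is to hash the author and quote text so that re-running the crawl yields the same id for the same quote. The helper name quote_id and the choice of SHA-1 truncated to 16 hex characters are illustrative, not something GridDB requires.

```python
import hashlib


def quote_id(quote):
    """Derive a repeatable identifier from a quote's author and text."""
    key = quote["author"] + "\n" + quote["text"]
    # Truncating the digest keeps the id short; this is an arbitrary choice
    return hashlib.sha1(key.encode("utf-8")).hexdigest()[:16]


sample = {
    "text": "\u201cAll you need is love. But a little chocolate now and then doesn't hurt.\u201d",
    "author": "Charles M. Schulz",
    "tags": ["chocolate", "food", "humor"],
}
print(quote_id(sample))
```

Placing such a value in an id column gives each quote a stable identity across repeated crawls, which helps when the same page is scraped more than once.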

Conclusion

In this tutorial, we saw how to create a spider in order to crawl data from a website. We stored the collected data in a JSON format so that it is easy to share among different platforms. Later on, we developed an insertion script for storing this data into GridDB.

Storing data in a database is crucial if you’re working with continuous data. It can be hard to manage multiple JSON files in such a case. GridDB makes it easier to store every bit of information in one place. This saves time and helps teams integrate without any hassle. Get started with GridDB today!

If you have any questions about the blog, please create a Stack Overflow post here https://stackoverflow.com/questions/ask?tags=griddb .

Make sure that you use the “griddb” tag so our engineers can quickly reply to your questions.